Last June, Tigera announced a first for Kubernetes: supporting open-source WireGuard for encrypting data in transit within your cluster. We never like to sit still, so we have been working hard on some exciting new features for this technology, the first of which is support for WireGuard on AKS using the Azure CNI.

First, a short recap of what WireGuard is and how we use it in Calico.

What is WireGuard?

WireGuard is a VPN technology available in the Linux kernel since version 5.6 and is positioned as an alternative to IPsec and OpenVPN. It aims to be faster, simpler, leaner and more useful. To that end, WireGuard takes an opinionated stance on which ciphers and algorithms it supports, limiting configurability in order to reduce the attack surface and make the technology easier to audit. It is simple to configure with standard Linux networking commands, and at only approximately 4,000 lines of code it is easy to read, understand, and audit.

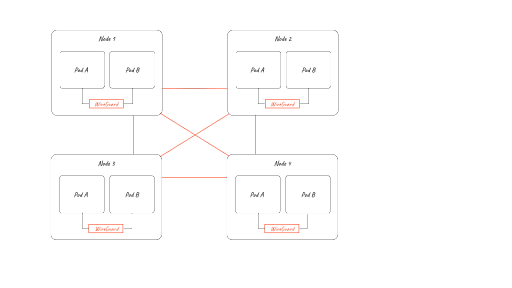

While WireGuard is a VPN technology and is typically thought of as client/server, it can be configured and used equally effectively in a peer-to-peer mesh architecture, which is how we designed our solution at Tigera to work in Kubernetes. Using Calico, all nodes with WireGuard enabled will peer with each other, forming an encrypted mesh. Traffic keeps flowing even in a cluster where WireGuard is enabled on some nodes and not on others.
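To make the mesh idea concrete, here is a minimal sketch of how the peer set for each node could be derived. The `Node` type and `mesh_peers` function are hypothetical names used only for illustration; this is not Calico's actual implementation, just a model of "every WireGuard-enabled node peers with every other WireGuard-enabled node".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    """Hypothetical, simplified view of a cluster node."""
    name: str
    public_key: Optional[str]  # None means WireGuard is not enabled on this node


def mesh_peers(nodes: list[Node]) -> dict[str, list[str]]:
    """For each WireGuard-enabled node, list the other enabled nodes it peers with."""
    enabled = [n for n in nodes if n.public_key is not None]
    return {
        n.name: [p.name for p in enabled if p.name != n.name]
        for n in enabled
    }


nodes = [
    Node("node-a", "key-a"),
    Node("node-b", "key-b"),
    Node("node-c", None),  # WireGuard not enabled here, so it is left out of the mesh
]
peers = mesh_peers(nodes)
print(peers)
```

In this model, node-c takes no part in the encrypted mesh, which corresponds to the mixed-cluster case described above.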

Our goal in leveraging WireGuard was to avoid compromise: we wanted to offer the simplest, most secure, and fastest way to encrypt data in transit in a Kubernetes cluster, without needing mTLS, IPsec, or complicated configuration. In fact, you can think of WireGuard as just another overlay, with the added benefit of encryption.

The user simply enables WireGuard with one command and Calico looks after everything else, including:

- Creating the WireGuard network interface on each node

- Calculating and programming the optimum MTU

- Creating the WireGuard public/private key pair for each node

- Adding the public key to each node resource for sharing across the cluster

- Programming the peers for each node

- Programming the IP routes, iptables rules, and routing tables with firewall marks (fwmarks) to handle routing correctly on each node

You just specify the intent; the cluster does everything else.
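As a rough sketch of what "one command" looks like in practice, the snippet below builds a `calicoctl patch` invocation that sets `wireguardEnabled` on the default FelixConfiguration. The helper name `enable_wireguard_command` is hypothetical, and the exact command may differ between Calico versions, so treat this as an illustration and check the documentation for your release.

```python
import json
import shlex


def enable_wireguard_command(enabled: bool = True) -> list[str]:
    """Build (but do not run) a calicoctl command that toggles WireGuard.

    Assumption: the cluster uses the 'default' FelixConfiguration resource
    and calicoctl is available on the PATH.
    """
    patch = json.dumps({"spec": {"wireguardEnabled": enabled}})
    return [
        "calicoctl", "patch", "felixconfiguration", "default",
        "--type=merge", "-p", patch,
    ]


cmd = enable_wireguard_command()
# Print a shell-safe version of the command for copy and paste.
print(shlex.join(cmd))
```

The function only constructs the argument list; running it (for example with `subprocess.run(cmd, check=True)`) is left to the reader.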

Packet flow with WireGuard explained

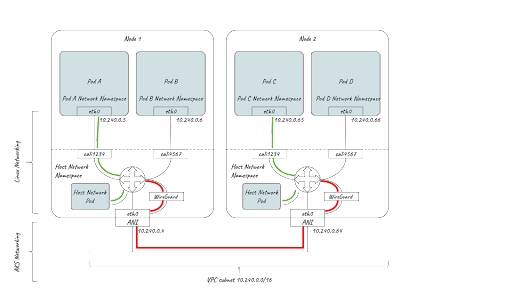

The diagram above shows the various packet flow scenarios in a cluster with WireGuard enabled.

Pods on the same host:

- Packets are routed to the WireGuard table

- If the target IP is a pod on the same host, then Calico will have inserted a “throw” entry in the WireGuard routing table directing the packet back to the main routing table. There the packet will be directed to the interface on the host side of the veth pair for the target pod and it will flow unencrypted (shown in green on the diagram).

Pods on different nodes:

- Packets are directed to the WireGuard table

- A routing entry is matched for the destination pod IP and the packet is sent to the WireGuard device wireguard.cali

- The WireGuard device encrypts the packet (shown in red on the diagram) with the public key of the peer whose allowed IPs match the destination pod IP, encapsulates it in UDP, and marks it with a special fwmark to prevent routing loops

- The packet is sent through eth0 to the destination node, where the reverse happens

- This also works for host traffic (for example, host-networked pods)
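The two cases above can be modelled with a small routing-table sketch. The addresses, the `build_wireguard_table` and `lookup` helpers, and the table layout are all hypothetical; the real lookup is done by the Linux kernel, and this only mirrors the logic of "throw" entries for local pods versus routes to the WireGuard device for remote pods.

```python
import ipaddress

# Hypothetical addresses for illustration only.
LOCAL_PODS = ["10.48.0.5/32", "10.48.0.6/32"]     # pods on this node
REMOTE_ROUTES = ["10.48.1.0/26", "10.48.2.0/26"]  # pod ranges on peer nodes


def build_wireguard_table(local_pods, remote_routes):
    """Model the WireGuard routing table as (network, action) pairs."""
    table = [(ipaddress.ip_network(p), "throw") for p in local_pods]
    table += [(ipaddress.ip_network(r), "wireguard.cali") for r in remote_routes]
    return table


def lookup(table, dst):
    """Return 'main' (unencrypted path) or the WireGuard device name."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, action) for net, action in table if addr in net]
    if not matches:
        return "main"  # nothing matched here, so fall through to the main table
    # Longest-prefix match, as a kernel routing lookup would do.
    _, action = max(matches, key=lambda m: m[0].prefixlen)
    # A "throw" entry hands the packet back to the main routing table.
    return "main" if action == "throw" else action


table = build_wireguard_table(LOCAL_PODS, REMOTE_ROUTES)
for dst in ("10.48.0.5", "10.48.1.17"):
    print(dst, "->", lookup(table, dst))
```

A destination that resolves to "main" leaves via the veth pair unencrypted; one that resolves to the WireGuard device is encrypted and encapsulated before leaving the node.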

In the following animation, you can see 3 flows:

- Pod-to-pod traffic on the same host flows unencrypted

- Pod-to-pod traffic on different hosts flows encrypted

- Host-to-host traffic also flows encrypted

AKS support

AKS with the Azure CNI presents some really interesting challenges for WireGuard support.

First of all, using the Azure CNI means IP addresses for pods are not allocated using Calico IPAM and CIDR blocks. Instead they are allocated from the underlying VNET in the same way as node IPs. This is a challenge for WireGuard routing: previously we could add a node's CIDR block to the AllowedIPs list in the WireGuard configuration for that peer, whereas now we have to write out a list of all pod IPs for that node. This requires the Calico routeSource configuration setting to be set to WorkloadIPs. If you are using our operator to deploy to AKS, this is already handled for you.
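The difference between the two modes can be sketched as follows. The `allowed_ips` helper and the sample addresses are hypothetical, and the two `route_source` strings are assumed to mirror the Calico setting values; the point is only that a CIDR-based peer entry collapses to one block per node, while a workload-IP-based entry needs one /32 per pod.

```python
import ipaddress


def allowed_ips(route_source, cidr_blocks=(), pod_ips=(), node_ip=None):
    """Return the AllowedIPs entries for one peer (illustrative model only).

    route_source: "CalicoIPAM" uses the node's CIDR blocks,
                  "WorkloadIPs" lists each pod IP individually.
    node_ip:      optionally include the peer's own host IP as a /32.
    """
    if route_source == "WorkloadIPs":
        entries = [f"{ip}/32" for ip in pod_ips]
    elif route_source == "CalicoIPAM":
        entries = list(cidr_blocks)
    else:
        raise ValueError(f"unknown route source: {route_source}")
    if node_ip is not None:
        entries.insert(0, f"{node_ip}/32")
    # Round-trip through ipaddress to validate and normalise each entry.
    return [str(ipaddress.ip_network(e)) for e in entries]


# Calico IPAM: one block covers every pod on the peer node.
print(allowed_ips("CalicoIPAM", cidr_blocks=["10.48.1.0/26"]))

# Azure CNI: pods draw addresses from the VNET, so each is listed on its own.
print(allowed_ips("WorkloadIPs",
                  pod_ips=["10.240.0.65", "10.240.0.66"],
                  node_ip="10.240.0.64"))
```

One consequence of the per-pod list is that the peer configuration has to be updated whenever a pod is created or deleted on that node.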

Using the excellent wg utility from wireguard-tools, you can see the allowed IPs for each peer: the set of pod IPs on that peer plus its host IP (note that the endpoint IP also appears in the allowed IP list). On AKS this provides workload encryption and also host-to-host encryption.

    interface: wireguard.cali
      public key: bbcKpAY+Q9VpmIRLT+yPaaOALxqnonxBuk5LRlvKClA=
      private key: (hidden)
      listening port: 51820
      fwmark: 0x100000

    peer: /r0PzTX6F0ZrW9ExPQE8zou2rh1vb20IU6SrXMiKImw=
      endpoint: 10.240.0.64:51820
      allowed ips: 10.240.0.64/32, 10.240.0.65/32, 10.240.0.66/32
      latest handshake: 11 seconds ago
      transfer: 1.17 MiB received, 3.04 MiB sent

    peer: QfUXYghyJWDcy+xLW0o+xJVsQhurVNdqtbstTsdOp20=
      endpoint: 10.240.0.4:51820
      allowed ips: 10.240.0.4/32, 10.240.0.5/32, 10.240.0.6/32
      latest handshake: 46 seconds ago
      transfer: 83.48 KiB received, 365.77 KiB sent
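If you want to check the allowed IPs programmatically, output like the above is easy to parse. The following is a minimal sketch that assumes the human-readable layout printed by wg (one "peer:" line followed by its attributes); `parse_allowed_ips` is a hypothetical helper, and the sample text is an abbreviated copy of the output above.

```python
def parse_allowed_ips(wg_output: str) -> dict[str, list[str]]:
    """Map each peer's public key to its list of allowed IPs."""
    peers: dict[str, list[str]] = {}
    current = None
    for raw in wg_output.splitlines():
        line = raw.strip()
        if line.startswith("interface:"):
            current = None  # attributes that follow belong to the interface
        elif line.startswith("peer:"):
            current = line.split(":", 1)[1].strip()
            peers[current] = []
        elif line.startswith("allowed ips:") and current is not None:
            value = line.split(":", 1)[1]
            peers[current] = [ip.strip() for ip in value.split(",") if ip.strip()]
    return peers


SAMPLE = """\
interface: wireguard.cali
  listening port: 51820
  fwmark: 0x100000

peer: /r0PzTX6F0ZrW9ExPQE8zou2rh1vb20IU6SrXMiKImw=
  endpoint: 10.240.0.64:51820
  allowed ips: 10.240.0.64/32, 10.240.0.65/32, 10.240.0.66/32

peer: QfUXYghyJWDcy+xLW0o+xJVsQhurVNdqtbstTsdOp20=
  endpoint: 10.240.0.4:51820
  allowed ips: 10.240.0.4/32, 10.240.0.5/32, 10.240.0.6/32
"""

parsed = parse_allowed_ips(SAMPLE)
for key, ips in parsed.items():
    print(key, ips)
```

For anything beyond a quick check, a machine-readable source is a safer choice than scraping human-oriented output.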

The second challenge is handling the correct MTU. Azure sets an MTU of 1500 and WireGuard sets the DF (Don’t Fragment) flag on the packets it sends. Without adjusting the WireGuard MTU to account for the encapsulation overhead, we see packet loss and low bandwidth with WireGuard enabled. We solve this by auto-detecting AKS in Calico and setting the correct overhead and MTU for WireGuard.
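The MTU arithmetic can be sketched as below. The per-header byte counts are WireGuard's standard data-packet overhead; the function names are hypothetical, and the underlay MTU is left as a parameter because the value Calico actually uses on AKS is not given here.

```python
IPV4_HEADER = 20
IPV6_HEADER = 40
UDP_HEADER = 8
WIREGUARD_HEADER = 16    # message type (4) + receiver index (4) + counter (8)
WIREGUARD_AUTH_TAG = 16  # Poly1305 authentication tag


def wireguard_overhead(ipv6_underlay: bool = False) -> int:
    """Bytes added to each packet by WireGuard encapsulation."""
    ip_header = IPV6_HEADER if ipv6_underlay else IPV4_HEADER
    return ip_header + UDP_HEADER + WIREGUARD_HEADER + WIREGUARD_AUTH_TAG


def wireguard_mtu(underlay_mtu: int, ipv6_underlay: bool = False) -> int:
    """Largest inner packet that fits in one underlay packet without fragmenting."""
    return underlay_mtu - wireguard_overhead(ipv6_underlay)


print(wireguard_overhead())   # IPv4 underlay overhead in bytes
print(wireguard_mtu(1500))    # WireGuard MTU for a 1500-byte underlay
```

Because the DF flag is set, an inner packet larger than this value cannot be fragmented on the way out, which is where the packet loss described above comes from.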

We can also add the node IP itself as an allowed IP for each peer, and handle host-networked pods and host-to-host communication all over WireGuard in AKS. The trick with host-to-host traffic is to get the response routed back through the WireGuard interface when the kernel performs its reverse path filtering (RPF) check. We solve this by setting a mark on incoming packets on the destination node, and then configuring the kernel, via sysctl, to respect that mark during the RPF check.

Now on AKS you have full support for workload and host encryption between nodes. You just specify the intent; the cluster does everything else.
