I read about “The Law of Leaky Abstractions”, famously coined by Joel Spolsky in 2002, when I was still a young programmer. This simple law stresses the importance of understanding at least the basics of the moving components in a software stack, both above and below the abstraction within which you are working. Abstractions are far from perfect, and this broader knowledge of how the layers of your system are implemented can help you address edge cases and error conditions effectively and, in some cases, optimize some aspect of your design.

This is the second installment of a three-part series describing how Project Calico can be used to secure a host’s endpoint.

- Part 1: What is a host endpoint and how is it different than a workload endpoint?

- Part 2: How do I write policies that match my intended traffic?

- Part 3: Securing endpoints in Kubernetes

Filtering and Transforming Packets with iptables

To design policies that accurately match traffic entering and leaving your hosts, it is important to understand how and where Project Calico matches packets before and after they traverse a host’s interface(s). Additionally, if you are using Kubernetes and its service abstraction managed by kube-proxy, you need to be aware of which address is being filtered. Both of these abstractions, enforcing network policy and transforming service virtual IPs into concrete workload destinations, use iptables to effect changes to normal packet flow and can produce obscure filtering results without an understanding of how they interact.

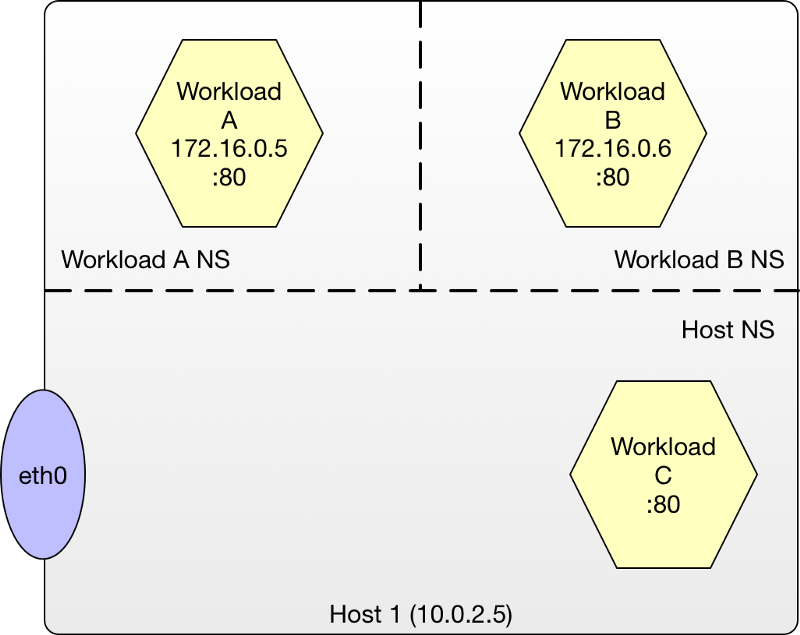

Imagine we have a Kubernetes worker node that is hosting two container workloads, A and B, each in its own network namespace, and another service in the host namespace, workload C. Workloads A and B are directly addressable but require a physical network connection on the host to forward packets to them. Look back to part one if you need a refresher on container connectivity.

To get a better understanding of what is happening, let’s progressively take a look at how policy processing works as we apply Project Calico policies and profiles to a host and eventually virtualized workloads.

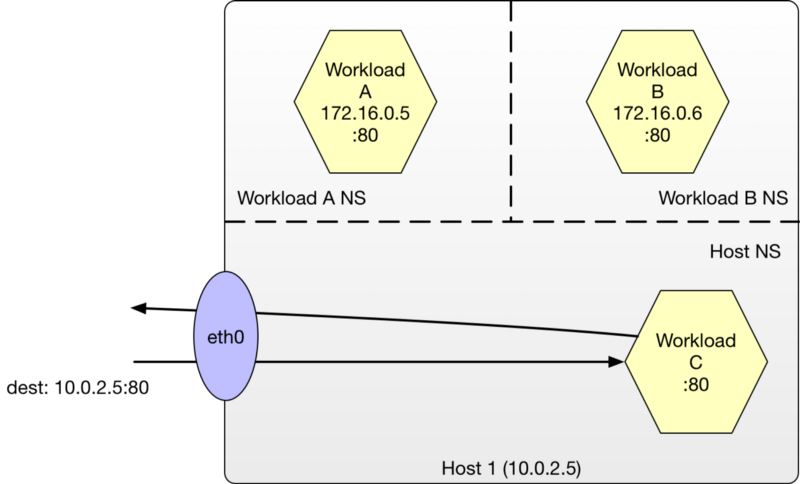

Simple Host Workload Communication

The simplest path involves a requestor sending packets to a port on the target node on which a host process, in our case Workload C, is listening. If no policies have been applied, packets entering the host are delivered to the workload unimpeded and returned in the same manner.

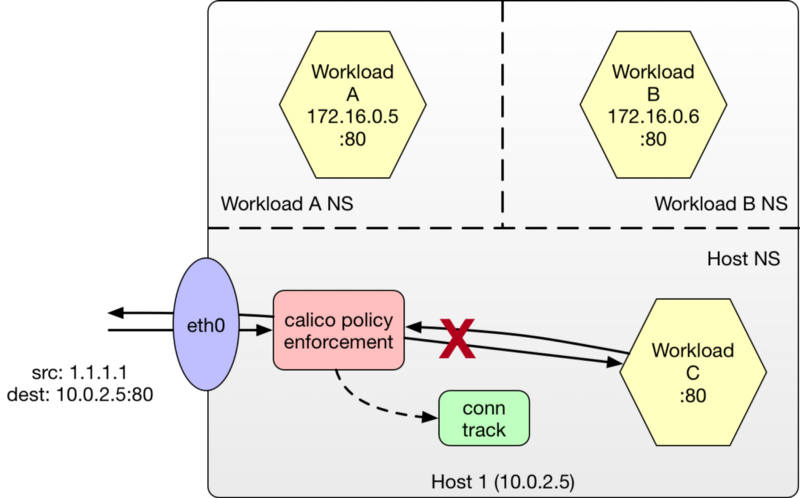

Introducing a Host Endpoint and Policy

Let’s make things more interesting by introducing a Calico HostEndpoint and a policy. We first define a host endpoint for eth0 and give it the label nodetype: worker, so that any policy whose selector matches nodetype == 'worker' is evaluated against it.

    apiVersion: v1
    kind: hostEndpoint
    metadata:
      name: eth0
      node: host1
      labels:
        nodetype: worker
    spec:
      interfaceName: eth0

Next we specify a policy that protects Workload C from being accessed by any node outside our cluster.

    apiVersion: v1
    kind: policy
    metadata:
      name: deny-host-access-on-worker
    spec:
      selector: nodetype == 'worker'
      types:
      - ingress
      - egress
      ingress:
      - action: deny
        source:
          notNets:
          - '10.0.2.0/24'
      egress:
      - action: allow

We are now effectively blocking access to Workload C from any node not within our cluster.
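To make the `notNets` match concrete, here is a minimal Python sketch of the test that the deny rule applies to a packet's source address. It is only an illustration of the matching logic, not how Calico implements it (Calico programs iptables rules). The function name `ingress_denied` and the sample addresses are hypothetical; the only value taken from the policy above is the `10.0.2.0/24` network.

```python
import ipaddress

# The cluster node network, taken from the policy's notNets entry.
CLUSTER_NET = ipaddress.ip_network("10.0.2.0/24")


def ingress_denied(source_ip: str) -> bool:
    """Return True when the deny rule's notNets clause matches the source.

    notNets matches every source address that is NOT inside the listed network.
    """
    return ipaddress.ip_address(source_ip) not in CLUSTER_NET


if __name__ == "__main__":
    # Hypothetical sample sources: one inside the node network, one outside.
    for src in ("10.0.2.15", "192.168.1.7"):
        print(src, "denied" if ingress_denied(src) else "not matched by deny rule")
```

A source that is not matched by the deny rule is not automatically allowed by this sketch; it simply falls through to whatever policy or profile is evaluated next.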

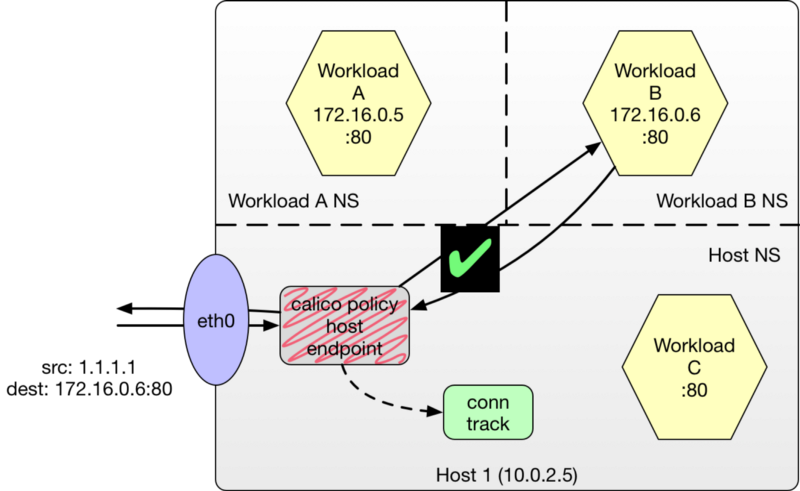

Exposing Hosted Workloads Despite HostEndpoint Policies

What if we wish to expose workloads A and B outside the cluster? We’re assuming we have exchanged the appropriate routes with the underlying fabric so that these workloads are reachable from beyond the cluster. We now have a slight problem: we just introduced a policy that blocks all traffic to the host endpoint from outside the cluster. If all such traffic is blocked, how is traffic destined for our workloads supposed to transit eth0 en route?

Project Calico is aware of this need and skips the host endpoint policy evaluation for traffic destined for the workload. This ensures that host endpoints do not interfere with normal workload traffic and forwarding can proceed normally.

We can of course specify policies to protect each workload endpoint as well, but for this post, let’s continue to focus on the host endpoints (for more information on setting policies, see the documentation on Policy Resources).

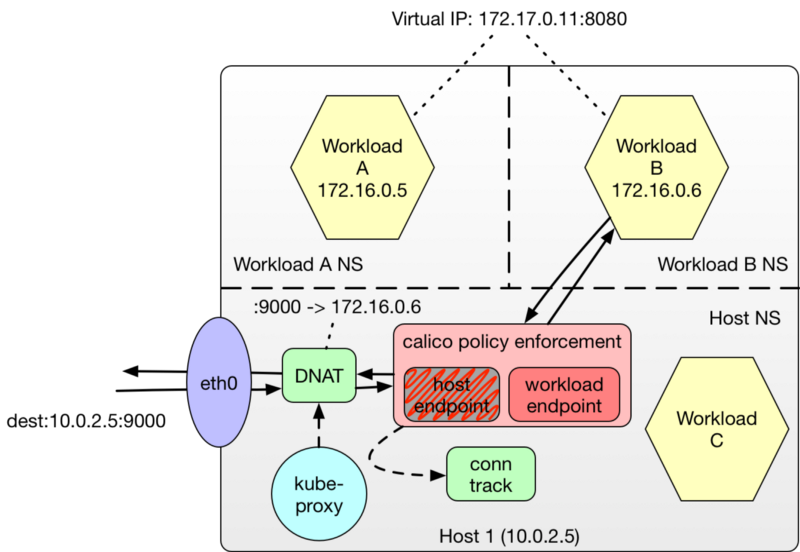

kube-proxy and DNAT Side-effects

In an elastic environment, it is useful to address a set of scaled micro-services as a single service and let the underlying orchestrator handle the load-balancing. As mentioned previously, Kubernetes supports this feature with kube-proxy. As services and endpoints are modified, kube-proxy tracks these changes and writes DNAT rules into the kernel using iptables. These rules rewrite the destination address from a service IP to a concrete workload IP, and the connection tracking table remembers the mapping so that return packets can be translated back. This translation is only available to nodes participating in the Calico network, or if an anycast VIP has been manually advertised to target a Calico network node.
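The DNAT and connection tracking behavior described above can be sketched as a toy model in Python. This is not how the kernel or kube-proxy is implemented; it only shows the idea that the destination is rewritten on the way in and that a remembered entry restores the service address on the reply. The class `ToyDnat`, its method names, and all addresses used with it are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

Addr = Tuple[str, int]            # (ip, port)
Flow = Tuple[str, int, str, int]  # (src_ip, src_port, dst_ip, dst_port)


class ToyDnat:
    """Toy model of service DNAT with a connection tracking table."""

    def __init__(self, services: Dict[Addr, List[Addr]],
                 choose: Callable[[List[Addr]], Addr] = random.choice):
        self.services = services  # service address -> backing workload addresses
        self.choose = choose      # how a backend is picked (random by default)
        self.conntrack: Dict[Flow, Addr] = {}  # expected reply flow -> service address

    def forward(self, flow: Flow) -> Flow:
        """Rewrite a service destination to one concrete workload address."""
        src_ip, src_port, dst_ip, dst_port = flow
        backends = self.services.get((dst_ip, dst_port))
        if not backends:
            return flow  # not a service address: leave the packet untouched
        backend_ip, backend_port = self.choose(backends)
        # Remember the translation, keyed by the reply we expect to see.
        self.conntrack[(backend_ip, backend_port, src_ip, src_port)] = (dst_ip, dst_port)
        return (src_ip, src_port, backend_ip, backend_port)

    def reply(self, flow: Flow) -> Flow:
        """Restore the service address as the source of a tracked reply."""
        original = self.conntrack.get(flow)
        if original is None:
            return flow
        _, _, dst_ip, dst_port = flow
        return (original[0], original[1], dst_ip, dst_port)
```

With a single hypothetical backend, `ToyDnat({("10.96.0.10", 80): [("172.16.1.5", 8080)]})` forwards a packet addressed to `10.96.0.10:80` on to `172.16.1.5:8080`, and the reply appears to the client to come from the service address again.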

A more common use case is an external source, such as a hardware load-balancer, needing a way to address a service. kube-proxy provides a NodePort feature that maps a single or multiple host ports to a service.

In either case, it’s important to understand which destination address the policy is being applied to.

One might intuitively think that implementing a Host Endpoint Policy for the NodePort binding on the host is sufficient to govern access to all the workloads backing the service. Unfortunately, the Linux kernel performs the DNAT on the address before Host Endpoint Policies are evaluated. That means that Project Calico detects a packet destined for a workload (not the host endpoint) and skips any enforcement of Host Endpoint policies.

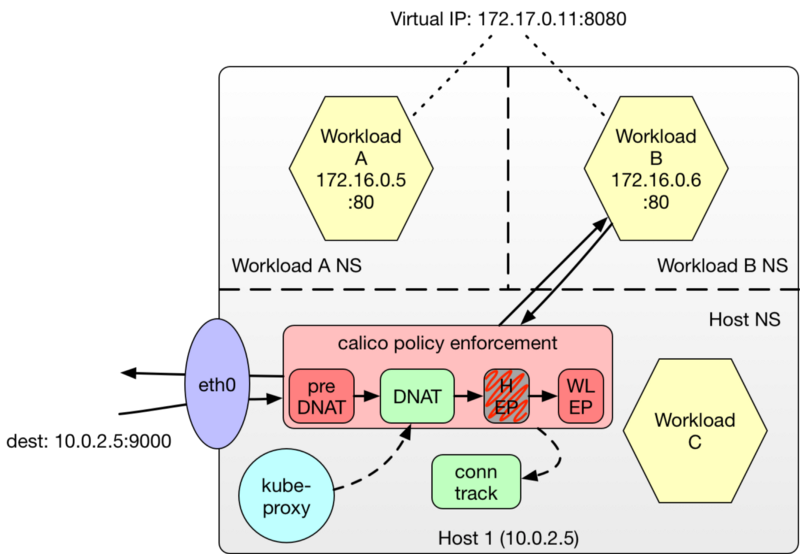

This means we must specify our policy for the Host Endpoint bound NodePort before any DNAT translation occurs!
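The ordering problem can be illustrated with a small Python model of the stages as this post describes them: an optional preDNAT check, then DNAT, then a host endpoint policy that only applies to packets still addressed to the host. It is a simplified sketch, not Calico's actual iptables chains. The addresses, the NodePort number, and the names `Packet`, `dnat`, `from_cluster`, and `process` are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass, replace
from typing import Callable, Optional

# Hypothetical addresses chosen for illustration only.
HOST_IP = "10.0.2.10"
NODE_PORT = 30080
WORKLOAD_ADDR = ("172.16.1.5", 8080)
CLUSTER_NET = ipaddress.ip_network("10.0.2.0/24")


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int


def from_cluster(pkt: Packet) -> bool:
    """Policy predicate: allow only sources inside the cluster node network."""
    return ipaddress.ip_address(pkt.src_ip) in CLUSTER_NET


def dnat(pkt: Packet) -> Packet:
    """Rewrite NodePort traffic so that it targets the backing workload."""
    if (pkt.dst_ip, pkt.dst_port) == (HOST_IP, NODE_PORT):
        return replace(pkt, dst_ip=WORKLOAD_ADDR[0], dst_port=WORKLOAD_ADDR[1])
    return pkt


def process(pkt: Packet,
            pre_dnat: Optional[Callable[[Packet], bool]] = None) -> str:
    # Stage 1: a preDNAT policy sees the original destination (the NodePort).
    if pre_dnat is not None and not pre_dnat(pkt):
        return "dropped by preDNAT policy"
    # Stage 2: DNAT rewrites the destination.
    pkt = dnat(pkt)
    # Stage 3: traffic now addressed to a workload skips host endpoint policy.
    if pkt.dst_ip != HOST_IP:
        return "forwarded to workload, host endpoint policy skipped"
    if from_cluster(pkt):
        return "delivered to host process"
    return "dropped by host endpoint policy"
```

In this model, an external packet sent to the NodePort is forwarded to the workload when no preDNAT check is supplied, but `process(pkt, pre_dnat=from_cluster)` drops it before the destination is rewritten.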

With a preDNAT policy, it is now possible to govern access to services exposed as NodePorts in a Kubernetes cluster. The following policies deny all access to host endpoints except from sources within the node and pod networks. Additional exceptions could be made for hardware load-balancers and other external services needing to consume the services mapped to the NodePorts.

    - apiVersion: v1
      kind: policy
      metadata:
        name: allow-cluster-internal-ingress
      spec:
        order: 10
        preDNAT: true
        ingress:
        - action: allow
          source:
            nets: [10.0.2.0/24, 172.16.0.0/16]
        selector: has(host-endpoint)
    - apiVersion: v1
      kind: policy
      metadata:
        name: drop-other-ingress
      spec:
        order: 20
        preDNAT: true
        ingress:
        - action: deny
        selector: has(host-endpoint)
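To see how the two `order` values interact, the following Python sketch walks the policies from lowest order to highest and returns the first rule that matches the source address. It is a toy model of the evaluation order only, under the assumption that each policy holds a single ingress rule as in the YAML above. The `POLICIES` structure and the `evaluate` function are hypothetical and are not part of Calico.

```python
import ipaddress
from typing import List, Optional, Tuple

# Mirrors the two policies above; nets=None means the rule matches any source.
POLICIES: List[dict] = [
    {"name": "drop-other-ingress", "order": 20, "action": "deny", "nets": None},
    {"name": "allow-cluster-internal-ingress", "order": 10, "action": "allow",
     "nets": ["10.0.2.0/24", "172.16.0.0/16"]},
]


def evaluate(source_ip: str) -> Tuple[str, Optional[str]]:
    """Return (action, policy name) for the first matching rule, lowest order first."""
    addr = ipaddress.ip_address(source_ip)
    for policy in sorted(POLICIES, key=lambda p: p["order"]):
        nets = policy["nets"]
        if nets is None or any(addr in ipaddress.ip_network(n) for n in nets):
            return policy["action"], policy["name"]
    return "no-match", None


if __name__ == "__main__":
    # Hypothetical sources: a node, a pod, and an external client.
    for src in ("10.0.2.4", "172.16.4.9", "203.0.113.8"):
        print(src, evaluate(src))
```

Because the allow policy has the lower order, sources in the node and pod networks are accepted before the catch-all deny is ever consulted.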

preDNAT Constraints

Before concluding, let me make you aware of some constraints when using the preDNAT feature.

- Currently, preDNAT is only supported when using Calico in its etcd datastore mode (i.e. not with the Kubernetes API datastore driver a.k.a. kdd mode).

- Only ingress policies are supported since the destination address has not been determined at the time of policy evaluation.

- Policies allowing untracked traffic (such as UDP packets targeting memcache) skip over both preDNAT and normal policies.

- If policy changes are made, it may be necessary to clear the connection tracking table using something similar to:

      conntrack -D -p tcp --orig-port-dst 9000

For additional documentation on HostEndpoint Policies and preDNAT, please see http://docs.projectcalico.org/latest/getting-started/bare-metal/bare-metal#pre-dnat-policy.

You now have the tools to tame the evaluation order of the iptables abstraction when evaluating network policy. Next time, we’ll use these new features to protect the Kubernetes control plane.
