We are excited to announce the general availability of Tigera Secure 2.5. With this release, security teams can now create and enforce security controls for Kubernetes using their existing firewall manager.

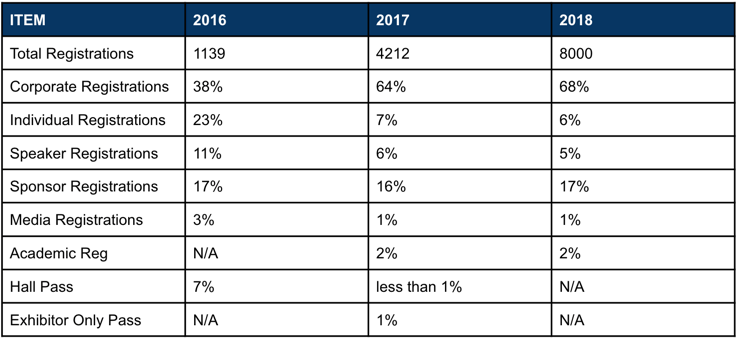

Container and Kubernetes adoption is gaining momentum in enterprise organizations. Gartner estimates that 30% of organizations are running containerized applications today and expects that number to grow to 75% by 2022. That’s tremendous growth considering the size and complexity of enterprise IT organizations. Exact metrics on Kubernetes adoption are hard to come by, but KubeCon North America attendance is a good proxy: KubeCon NA registrations grew from 1,139 in 2016 to over 8,000 in 2018 and are expected to surpass 12,000 this December, and the share of corporate registrations has increased dramatically.

Despite this growth, Kubernetes still accounts for a tiny percentage of the overall estate the security team needs to manage, sometimes less than 1% of total workloads. Security teams are stretched thin and understaffed, so it’s no surprise that they don’t have time to learn the nuances of Kubernetes and rethink their security architecture, workflow, and tools for just a handful of applications. The result is stalled deployments and considerable friction between the application, infrastructure, and security teams. These problems would go away if the security team’s existing tools and processes worked for Kubernetes.

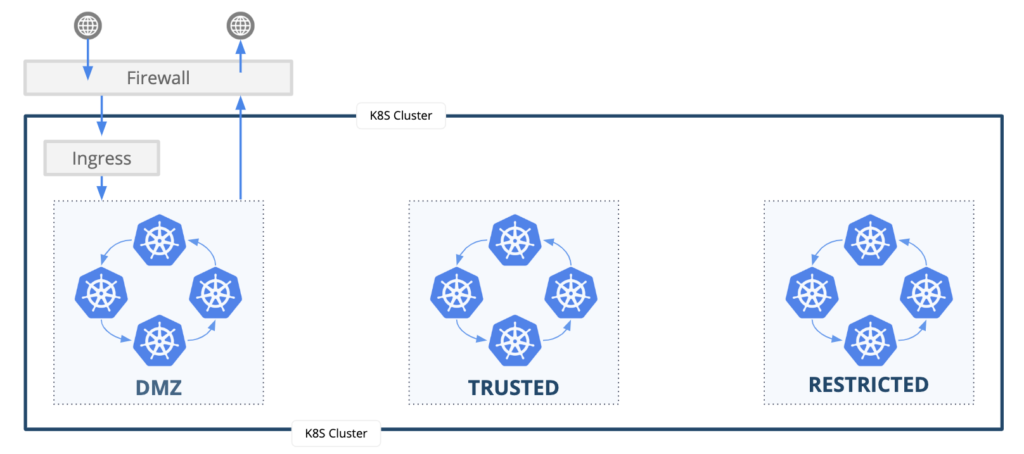

Why the friction? Much of it stems from the architecture security teams use to manage risk: specifically, a zone-based architecture. Security teams classify IT assets into risk categories and deploy them to segmented zones. There is generally a demilitarized zone (DMZ) whose workloads accept connections from the internet (the “untrusted zone”). Workloads that do not accept inbound internet connections are placed in other zones based on their risk profile. For example, a database containing personally identifiable information (PII) would never be placed in the DMZ, where internet connections could potentially reach the data; it would be placed in a restricted zone behind a firewall, where only a few whitelisted workloads are allowed to connect.

The security team’s policies, compliance requirements, and workflow revolve around this architecture, which has been used successfully for decades, and Kubernetes doesn’t fit into it. Kubernetes workloads are dynamic and ephemeral: they are short-lived, and their locations and IP addresses change constantly and unpredictably. Because firewalls, VLANs, and cloud-native VPCs and security groups are built around relatively static hosts and addresses, they cannot be used to implement the security team’s zone-based architecture for Kubernetes workloads.

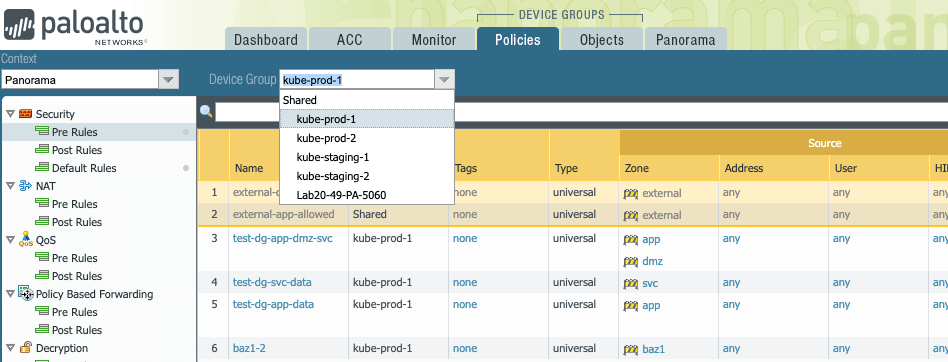

Tigera Secure 2.5 integrates with the security team’s firewall manager to implement their security controls in Kubernetes. Palo Alto Panorama is the first firewall manager we support. Here’s how it works.

- Kubernetes clusters appear as Device Groups in the Panorama interface.

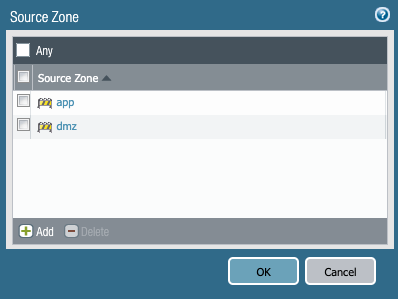

- The firewall administrator uses Panorama to define their security zones, and Tigera Secure automatically creates a matching zone-based architecture within Kubernetes. By default, workloads can communicate within a zone but not between zones.

- Kubernetes workloads are assigned to zones with a label that identifies the zone each workload belongs to. Workloads without a zone label are placed in a “default” zone.

- The firewall administrator defines which workloads can communicate from one zone to another. The Panorama “app ID” is mapped to the Kubernetes pod name.

- Tigera Secure enforces security policies, logs traffic, and sends alerts to the SIEM platform.
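To make the zone rules above concrete, here is a minimal sketch of how such a model behaves. It is a hypothetical illustration, not Tigera's implementation: the label key `zone`, the `Workload` class, and the `is_allowed` helper are assumptions. It follows the rules as described: unlabeled workloads fall into a "default" zone, traffic within a zone is allowed, and cross-zone traffic needs an explicit rule keyed on the app ID (pod name).

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

ZONE_LABEL = "zone"       # hypothetical label key for zone membership
DEFAULT_ZONE = "default"


@dataclass
class Workload:
    pod_name: str                                   # stands in for the Panorama "app ID"
    labels: Dict[str, str] = field(default_factory=dict)

    @property
    def zone(self) -> str:
        # Workloads without a zone label fall into the default zone.
        return self.labels.get(ZONE_LABEL, DEFAULT_ZONE)


def is_allowed(src: Workload, dst: Workload, cross_zone_rules: Set[Tuple[str, str]]) -> bool:
    """Decide whether src may open a connection to dst under the zone model."""
    if src.zone == dst.zone:
        return True  # intra-zone traffic is allowed by default
    # Cross-zone traffic needs an explicit (source app, destination app) rule.
    return (src.pod_name, dst.pod_name) in cross_zone_rules


web = Workload("web-frontend", {"zone": "dmz"})
api = Workload("orders-api", {"zone": "trusted"})
db = Workload("customer-db", {"zone": "restricted"})
batch = Workload("nightly-report")  # no zone label

rules = {("web-frontend", "orders-api"), ("orders-api", "customer-db")}

print(is_allowed(web, api, rules))  # True: explicit cross-zone rule
print(is_allowed(web, db, rules))   # False: DMZ cannot reach the restricted zone
print(batch.zone)                   # default
```

Keeping intra-zone traffic open and requiring explicit cross-zone rules mirrors the default described in the list: the firewall administrator only has to reason about traffic that crosses a zone boundary.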

The firewall manager creates security policies in a higher-precedence tier that developers cannot tamper with or override when deploying their pods and Network Policies. This lets the security team define its higher-level controls while providing guardrails that allow the DevSecOps toolchain to automate deployments without a manual firewall change request.
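The guardrail idea can be sketched as ordered tiers, where the first tier to reach a decisive verdict wins. This is a simplified, hypothetical model (the `evaluate` function, rule functions, and the "pass" verdict handling are assumptions, not the exact Tigera semantics), but it shows why an over-permissive developer policy in a lower tier cannot undo a security-team decision above it.

```python
from typing import Callable, Dict, List, Tuple

Flow = Dict[str, str]
Rule = Callable[[Flow], str]  # hypothetical: returns "allow", "deny", or "pass"


def evaluate(tiers: List[Tuple[str, List[Rule]]], flow: Flow) -> Tuple[str, str]:
    """Return (deciding tier, verdict), checking tiers in precedence order."""
    for tier_name, rules in tiers:  # highest-precedence tier first
        for rule in rules:
            verdict = rule(flow)
            if verdict in ("allow", "deny"):
                return tier_name, verdict  # decisive: lower tiers never see the flow
    return "none", "deny"  # no tier decided: default deny


def security_block_dmz_to_restricted(flow: Flow) -> str:
    # Security-team rule: the DMZ may never reach the restricted zone.
    if flow["src_zone"] == "dmz" and flow["dst_zone"] == "restricted":
        return "deny"
    return "pass"  # defer everything else to lower tiers


def developer_allow_everything(flow: Flow) -> str:
    return "allow"  # an over-permissive application policy


tiers = [
    ("security", [security_block_dmz_to_restricted]),
    ("application", [developer_allow_everything]),
]

print(evaluate(tiers, {"src_zone": "dmz", "dst_zone": "restricted"}))
# ('security', 'deny')
print(evaluate(tiers, {"src_zone": "trusted", "dst_zone": "restricted"}))
# ('application', 'allow')
```

Because the security tier is consulted first, developers keep full control over traffic the security team has not ruled on, which is what lets deployments proceed without a firewall change request.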

Tigera Secure 2.5 also includes several other exciting new capabilities described below.

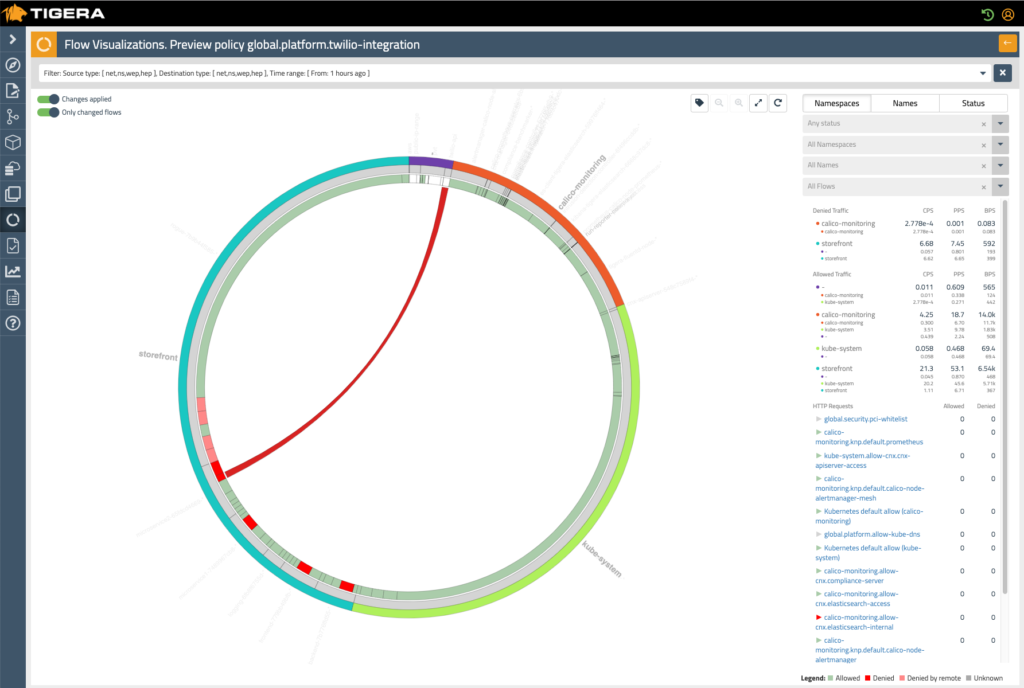

Policy Preview Mode

Kubernetes Network Policies are powerful because they let you define fine-grained rules between pods. However, as your cluster scales, reviewing changes to your Network Policies becomes a complex and time-consuming task, and one small typo could break connectivity across your entire cluster.

Policy Preview Mode lets you define changes to your Kubernetes Network Policies and preview their effects before committing and enforcing them, which takes much of the risk out of Network Policy changes.
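One way to think about a preview is to replay recently observed flows against both the current and the proposed policy and report every flow whose verdict would change. The sketch below is hypothetical: the flow tuple shape, the allow-set policy model, and the `preview` helper are assumptions for illustration, not how Tigera's Policy Preview Mode is built. Note how a one-digit port typo surfaces as a flow that would flip from allow to deny.

```python
from typing import Dict, List, Set, Tuple

Flow = Tuple[str, str, int]  # hypothetical: (source app, destination app, port)


def verdict(allowed: Set[Flow], flow: Flow) -> str:
    return "allow" if flow in allowed else "deny"


def preview(current: Set[Flow], proposed: Set[Flow],
            observed: List[Flow]) -> Dict[Flow, Tuple[str, str]]:
    """Map each observed flow whose verdict changes to (before, after)."""
    changes: Dict[Flow, Tuple[str, str]] = {}
    for flow in observed:
        before, after = verdict(current, flow), verdict(proposed, flow)
        if before != after:
            changes[flow] = (before, after)
    return changes


observed = [("web", "api", 8080), ("api", "db", 5432), ("web", "db", 5432)]
current = {("web", "api", 8080), ("api", "db", 5432)}
# The proposed edit contains a typo: port 5423 instead of 5432.
proposed = {("web", "api", 8080), ("api", "db", 5423)}

for flow, (before, after) in preview(current, proposed, observed).items():
    print(flow, before, "->", after)
# ('api', 'db', 5432) allow -> deny
```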

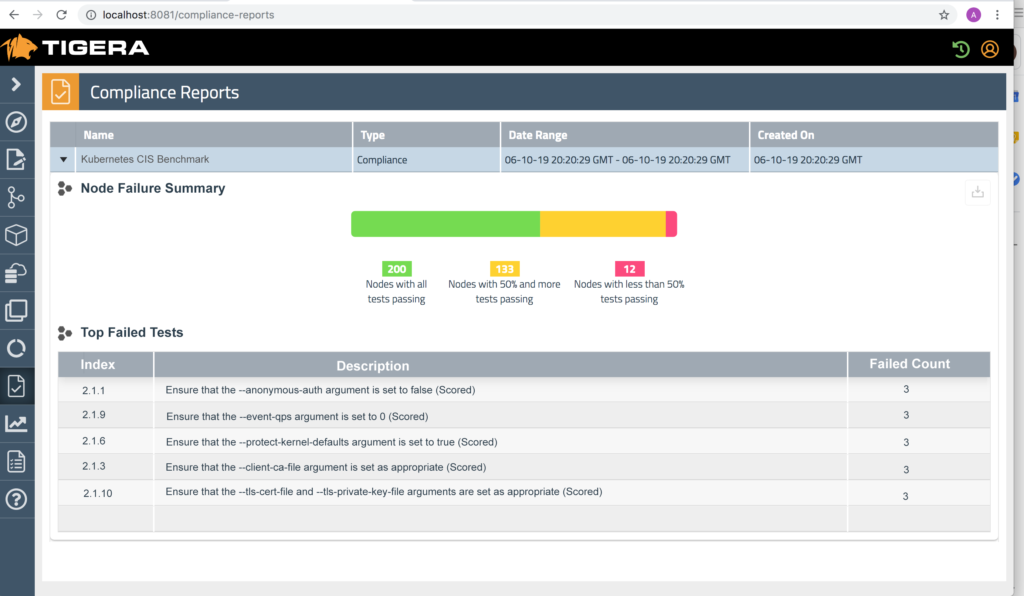

Security Configuration Monitoring and Compliance

A misconfigured Kubernetes deployment can be exploited by an attacker to gain access to your cluster. Tigera’s Security Configuration Compliance continuously monitors, reports, and alerts on security-related configuration issues based on the Center for Internet Security (CIS) Kubernetes Benchmark. Tigera’s Anomaly Detection logs indicators of compromise with a link to the point-in-time Kubernetes configuration.
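As a rough illustration of configuration monitoring, the sketch below audits kube-apiserver arguments against two recommendations of the kind found in the CIS Kubernetes Benchmark: disabling anonymous authentication and disabling profiling. The `REQUIRED_APISERVER_FLAGS` table and the `audit` helper are hypothetical, cover only a tiny subset of the benchmark, and are not Tigera's implementation. Both flags default to true when unset, so a missing flag is reported as a finding.

```python
from typing import Dict, List

# Illustrative subset of API server recommendations: flag -> required value.
REQUIRED_APISERVER_FLAGS: Dict[str, str] = {
    "--anonymous-auth": "false",
    "--profiling": "false",
}


def parse_flags(args: List[str]) -> Dict[str, str]:
    """Turn ["--key=value", "--bool-flag"] into {"--key": "value", "--bool-flag": "true"}.

    Simplification: the space-separated "--key value" form is not handled.
    """
    flags: Dict[str, str] = {}
    for arg in args:
        if not arg.startswith("--"):
            continue  # skip the binary name and positional arguments
        key, sep, value = arg.partition("=")
        flags[key] = value if sep else "true"  # a bare boolean flag means true
    return flags


def audit(args: List[str]) -> List[str]:
    """Return one finding per required flag that is missing or set incorrectly."""
    flags = parse_flags(args)
    findings = []
    for flag, required in REQUIRED_APISERVER_FLAGS.items():
        actual = flags.get(flag)
        if actual != required:
            findings.append(f"{flag} should be {required}, found {actual or 'unset'}")
    return findings


apiserver_args = ["kube-apiserver", "--anonymous-auth=false", "--secure-port=6443"]
for finding in audit(apiserver_args):
    print(finding)
# --profiling should be false, found unset
```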

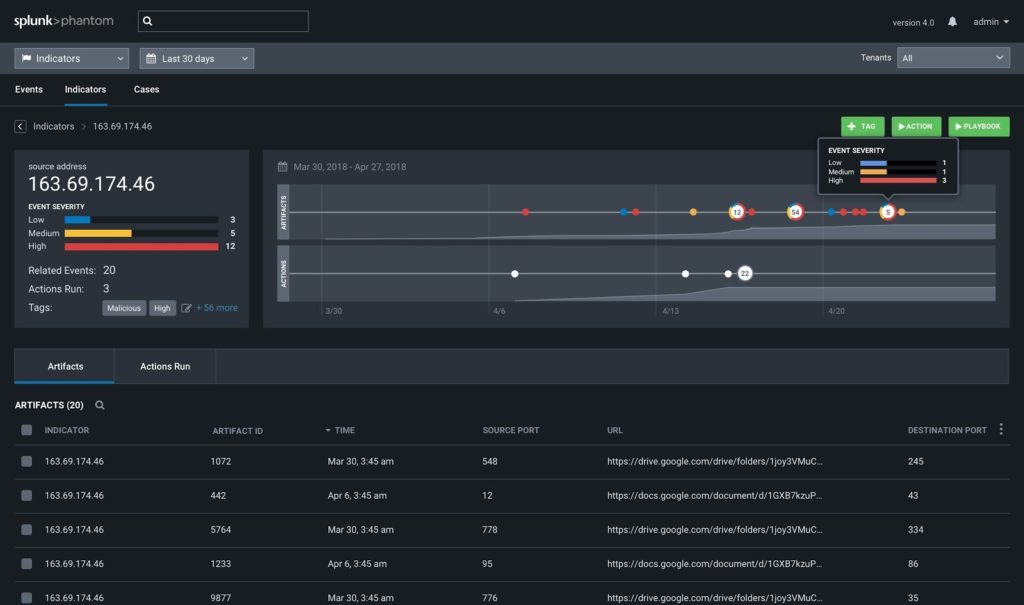

Splunk Integration

The security operations center (SOC) uses a Security Information and Event Management (SIEM) platform to collect, categorize, and prioritize security alerts. Tigera Secure can be configured to integrate with most SIEM tools, and Tigera Secure 2.5 adds an out-of-the-box integration with Splunk, enabling security teams to use their existing tools and processes to manage both traditional workloads and modern Kubernetes workloads.
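For readers curious how alerts can reach Splunk in general, here is a sketch that builds a request for Splunk's HTTP Event Collector (HEC). This is a generic, swapped-in technique, not a description of how Tigera's built-in integration works; the host, token, and alert fields are placeholders, and the request is only constructed, never sent.

```python
import json
import urllib.request

HEC_URL = "https://splunk.example.com:8088/services/collector/event"  # placeholder host
HEC_TOKEN = "00000000-0000-0000-0000-000000000000"                    # placeholder token


def build_hec_request(alert: dict) -> urllib.request.Request:
    """Wrap an alert dict in an HEC event envelope and build a POST request."""
    body = json.dumps({"event": alert, "sourcetype": "_json"}).encode("utf-8")
    return urllib.request.Request(
        HEC_URL,
        data=body,
        headers={
            "Authorization": f"Splunk {HEC_TOKEN}",  # HEC token authentication
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical alert fields, for illustration only.
alert = {"type": "policy_violation", "namespace": "payments",
         "source_ip": "203.0.113.7", "action": "deny"}
req = build_hec_request(alert)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send it; omitted because the host is a placeholder.
```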

Ingress Flow Logs

There are a few challenges when dealing with traffic flowing into a Kubernetes cluster.

- In most Kubernetes architectures, the firewall forwards all ingress traffic to an Ingress Controller. Because all inbound traffic goes to that single destination, the firewall has no visibility into its actual destination.

- The Ingress Controller then routes each inbound request to the correct pod. However, the pod sees the connection as coming from the Ingress Controller, so the original source IP is lost.

- Without knowing the actual source or destination, it is difficult to mitigate an attack.

Tigera Flow Logs contain accurate source and destination data as well as Kubernetes context (namespace, pod, labels, policies, status) for all inbound, outbound, and east-west traffic. You get an accurate view of all network flows and their actual sources and destinations.
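To show why that context matters during an incident, here is a sketch of querying flow records that carry the fields listed above (namespace, pod, labels, policies, status). The `FlowLog` record and its field names are hypothetical, not Tigera's actual log schema, and the sketch assumes `source_ip` holds the original client address rather than the Ingress Controller's. With that in place, ranking the sources of denied inbound flows to a sensitive namespace is a simple query.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FlowLog:
    source_ip: str                    # assumed: original client IP, not the Ingress Controller
    dest_namespace: str
    dest_pod: str
    dest_labels: Dict[str, str] = field(default_factory=dict)
    policies: List[str] = field(default_factory=list)  # policies that matched the flow
    status: str = "allow"             # "allow" or "deny"
    direction: str = "inbound"        # "inbound", "outbound", or "east-west"


def top_denied_sources(logs: List[FlowLog], namespace: str, n: int = 3) -> List[Tuple[str, int]]:
    """Rank the sources of denied inbound flows aimed at one namespace."""
    hits = Counter(
        log.source_ip
        for log in logs
        if log.direction == "inbound"
        and log.status == "deny"
        and log.dest_namespace == namespace
    )
    return hits.most_common(n)


logs = [
    FlowLog("203.0.113.7", "payments", "checkout-7f9c", {"zone": "restricted"},
            ["security.block-dmz"], "deny"),
    FlowLog("203.0.113.7", "payments", "checkout-7f9c", {"zone": "restricted"},
            ["security.block-dmz"], "deny"),
    FlowLog("198.51.100.4", "payments", "checkout-7f9c", {"zone": "restricted"},
            ["app.allow-web"], "allow"),
]

print(top_denied_sources(logs, "payments"))  # [('203.0.113.7', 2)]
```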

Want to Learn More?

If you would like to check out a live demo:

- Stop by the Tigera booth (#665) at Black Hat

- Not at Black Hat? Schedule a live demo

- Have questions? Click the online chat bubble on the lower-right side of this page to speak with a Tigera representative.
