In this blog post, I will be looking at 10 best practices for Kubernetes security policy design. Application modernization is a strategic initiative that changes the way enterprises do business. The journey requires a significant investment in people, processes, and technology to achieve the desired business outcomes: accelerating the pace of innovation, optimizing cost, and improving an enterprise’s overall security posture. It is crucial to establish the right foundation from the beginning to avoid the high cost of re-architecture. Developing a standard, scalable security design for your Kubernetes environment establishes the framework for implementing the checks, enforcement, and visibility needed to enable your strategic business objectives.

High-level design

Building a scalable Kubernetes security policy design requires a fully cloud-native mindset that takes into account how your streamlined CI/CD process should enable security policy provisioning. A sound design should meet your enforcement and policy provisioning requirements on Day-N while accommodating your Day-1 requirements. The following list summarizes the fundamental requirements that a cloud-native security policy design should include:

- Segmentation of the control, management, and data planes

- Segmentation of deployment environments

- Multi-tenancy controls

- Application microsegmentation

- Cluster hardening

- Ingress access control

- Egress access control

- Role-based access control (RBAC)

- Security governance

- Security policy standardization and centralization

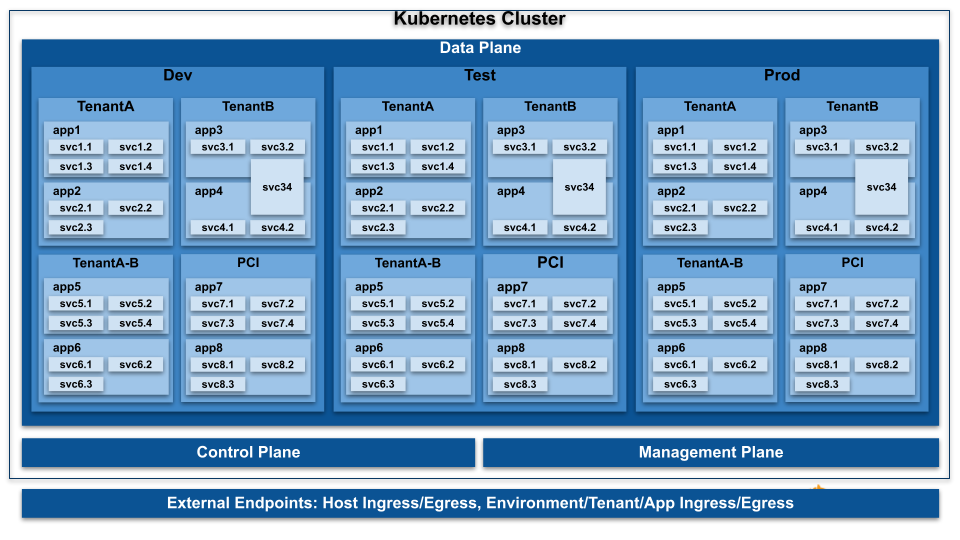

The graphic above depicts a logical design that implements granular segmentation and the common use cases organizations typically adopt. Developing a similar diagram helps you clearly represent your security requirements and serves as a framework for implementing your design.

Let’s take a look at the above-mentioned fundamental requirements for Kubernetes security policy design, and best practices for each.

#1: Segmentation of the control, management, and data planes

Let’s start with the definition of data, control, and management planes in a Kubernetes environment:

- Data plane services are the business applications that are deployed in the environment

- The control plane includes the platform applications and services that collectively deliver the platform components

- The management plane includes the services used for managing and monitoring hosts and control plane applications

The segmentation requirements stem from the distinct role each plane plays in delivering services in a Kubernetes environment. Separating the control, management, and data planes is also a common security compliance requirement, because it helps prevent vulnerabilities from cascading from one plane to another. The control plane is vital for the availability of the platform and typically involves host services or services backed by pods with host networking. The management plane is critical for operations teams to monitor and configure the platform to deliver its intended use cases. The data plane hosts applications that are essential for business operations.

Recommendations:

- Develop a Kubernetes security policy design that isolates the control, management, and data planes.

- Implement granular segmentation of external endpoints requiring access to control, management, or data planes.
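To make plane isolation concrete, the sketch below models it as an explicit allow matrix between planes. It assumes a hypothetical `plane` namespace label and an illustrative set of permitted plane-to-plane flows; it is not a recommended baseline, and unlabelled namespaces are deliberately treated as "unassigned" so they are denied.

```python
# Hypothetical namespace label that tags each namespace with its plane.
PLANE_LABEL = "plane"

# Illustrative (source plane, destination plane) pairs that may communicate.
# Everything not listed here is denied.
ALLOWED_FLOWS = {
    ("management", "management"),
    ("management", "control"),  # e.g. monitoring scraping control plane metrics
    ("control", "control"),
    ("data", "data"),
}


def plane_of(namespace_labels: dict[str, str]) -> str:
    """Return the plane of a namespace; unlabelled namespaces are 'unassigned'."""
    return namespace_labels.get(PLANE_LABEL, "unassigned")


def flow_allowed(src_labels: dict[str, str], dst_labels: dict[str, str]) -> bool:
    """Allow a flow only if its (source plane, destination plane) pair is listed."""
    return (plane_of(src_labels), plane_of(dst_labels)) in ALLOWED_FLOWS


if __name__ == "__main__":
    print(flow_allowed({"plane": "management"}, {"plane": "control"}))
    print(flow_allowed({"plane": "data"}, {"plane": "management"}))
```

A real matrix usually needs a few narrow exceptions (for example, data plane pods resolving DNS through a service in the control plane); those are better expressed as granular rules than by opening a whole plane-to-plane pair.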

#2: Segmentation of deployment environments

Some organizations choose to have dedicated clusters for different deployment environments (e.g. dev, test, prod). Others choose shared clusters for multiple deployment environments to meet their requirements or constraints (e.g. a shared dev and test cluster). In all cases, segmentation between deployment environments must be maintained.

Recommendation:

- Build a policy design that can accommodate deploying applications in both shared and dedicated clusters.
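One way to make the same policy work in both shared and dedicated clusters is to select peers by label rather than by cluster. The sketch below builds a standard Kubernetes `NetworkPolicy` (as a Python dict, printable as JSON for `kubectl apply -f`) that admits ingress only from namespaces carrying the same environment label. The `environment` label key and the namespace names are assumptions for illustration.

```python
import json

# Hypothetical namespace label carrying the deployment environment.
ENV_LABEL = "environment"


def environment_isolation_policy(namespace: str, environment: str) -> dict:
    """NetworkPolicy admitting ingress only from namespaces in the same environment."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "environment-isolation", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector: applies to every pod in the namespace
            "policyTypes": ["Ingress"],
            "ingress": [
                {"from": [{"namespaceSelector": {"matchLabels": {ENV_LABEL: environment}}}]}
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(environment_isolation_policy("payments-dev", "dev"), indent=2))
```

Because the selector only references labels, the same manifest is valid whether `dev` shares a cluster with `test` or runs alone. Keep in mind that Kubernetes network policies are additive, so another policy selecting the same pods can still widen access, and enforcement requires a CNI plugin that implements NetworkPolicy.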

#3: Multi-tenancy controls

Multi-tenancy is a broad term that represents, from the point of view of Kubernetes security policy, multiple tenants securely sharing the same platform. Depending on your organization, a tenant may represent any of the following:

- Business unit

- Functional team

- Control, management, and data plane

- Compliance environment

- Deployment environment (e.g. dev, test, prod)

- Different personas with different security control requirements (e.g. security team, platform team, developers)

A sound Kubernetes security policy design should accommodate all of your organization’s current multi-tenancy requirements and scale as your deployment environments scale. The trust boundaries of a tenant are not always sharp, so your design should also accommodate “exceptions” to the high-level multi-tenancy rule. A shared service, such as a database used by multiple applications within one tenant or across multiple tenants, is an example of such an exception.

Recommendations:

- Develop a hierarchical design that can meet your multi-tenancy use cases and applies the principle of least privilege (PoLP).

- Implement multi-tenancy policies that have cluster-wide scope (apply to all namespaces and all pods).

- Delegate policy implementation and enforcement to the right persona in your organization (e.g. security team is responsible for implementing and approving the multi-tenancy and compliance policies).

- Centralize ingress and egress external access controls for all applications.

- Ensure proper integration with your legacy infrastructure or cloud environment.

- Determine your ingress/egress policy strategy based on complexity, performance, and desired security posture tradeoffs.

- Design to achieve native compliance of applications.
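The cluster-wide tenant rule and its shared-service exception can be expressed as a small decision function. This is a minimal sketch under assumptions: the `Namespace` type and its `shared_with` field are hypothetical stand-ins for whatever labels or annotations your design uses to record approved cross-tenant access.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Namespace:
    name: str
    tenant: str
    # Hypothetical field: tenants explicitly granted access to a shared service here.
    shared_with: frozenset[str] = frozenset()


def tenant_allows(src: Namespace, dst: Namespace) -> bool:
    """High-level tenant rule: same tenant, or an explicit shared-service exception."""
    if src.tenant == dst.tenant:
        return True
    return src.tenant in dst.shared_with


if __name__ == "__main__":
    orders = Namespace("orders", tenant="retail")
    billing = Namespace("billing", tenant="finance")
    customers_db = Namespace("customers-db", tenant="finance", shared_with=frozenset({"retail"}))
    print(tenant_allows(orders, billing))
    print(tenant_allows(orders, customers_db))
```

The function only answers the coarse, cluster-wide question; granular microsegmentation policies would still narrow which workloads and ports inside an allowed tenant pair may actually talk.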

#4: Application microsegmentation controls

Application microsegmentation policies should implement controls that align with the microservices communication map. In a fully streamlined CI/CD pipeline, application microsegmentation controls can be delegated to developers, who implement them as code alongside the application manifests. A hierarchical multi-tenancy design can ensure that these granular controls comply with the high-level enterprise security policies at all times. This can be achieved by delegating control only over flows that the top-of-funnel controls permit and denying everything else. Microsegmentation controls should extend beyond Layer 4 policies to include more context about microservices communication, such as service accounts (and, implicitly, their associated certificates) and Layer 7 attributes. This provides not only a zero-trust policy model but also native compliance.

Defining the application trust boundary is essential for implementing application microsegmentation. Organizations typically require segmentation of applications while allowing the use case of shared services. Developers should also have the autonomy to provision policies within the context of their authorized namespace(s).

Recommendations:

- Implement granular ingress and egress controls for your application microservices.

- Include additional context to your policies definition, such as service accounts and Layer 7 attributes, to add depth to your attribution and enforcement.

- Ensure your applications respect the multi-tenancy rules and the label standard through governance controls in your CI/CD pipeline or at runtime.

- Implement a one-namespace-per-application model.

- If multiple applications access a shared service, such as a database, place the shared service in its own namespace, in addition to the application namespaces.
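A communication map translates fairly directly into Layer 4 policy as code. The sketch below generates one ingress `NetworkPolicy` per destination service from a hypothetical map of `destination -> [(caller, port)]`, selecting pods by an assumed `app` label. Standard Kubernetes NetworkPolicy cannot match service accounts or Layer 7 attributes; those require a richer policy engine, which this sketch does not model.

```python
# Hypothetical communication map for one application namespace:
# destination service -> list of (calling service, TCP port).
COMM_MAP = {
    "api": [("frontend", 8080)],
    "db": [("api", 5432)],
}


def policies_from_map(namespace: str, comm_map: dict[str, list[tuple[str, int]]]) -> list[dict]:
    """Generate one ingress NetworkPolicy per destination, keyed on the 'app' label."""
    policies = []
    for dst, callers in comm_map.items():
        policies.append({
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": f"allow-to-{dst}", "namespace": namespace},
            "spec": {
                "podSelector": {"matchLabels": {"app": dst}},
                "policyTypes": ["Ingress"],
                # One rule per allowed caller, restricted to its TCP port.
                "ingress": [
                    {
                        "from": [{"podSelector": {"matchLabels": {"app": src}}}],
                        "ports": [{"protocol": "TCP", "port": port}],
                    }
                    for src, port in callers
                ],
            },
        })
    return policies


if __name__ == "__main__":
    for policy in policies_from_map("shop", COMM_MAP):
        print(policy["metadata"]["name"])
```

Pods selected by at least one policy become isolated for ingress, so anything not in the map is denied for `api` and `db`. Pods that no policy selects (here, `frontend`) stay open, which is why a namespace-wide default-deny policy is commonly added alongside generated rules.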

#5: Cluster hardening

Cluster hardening is a broad topic that can include a wide set of controls and considerations. From a Kubernetes security policy perspective, it includes the controls required to secure the control and management planes, which are mission-critical for the availability of your business applications. A compromised application pod can be contained within its own environment, but a compromised host can impact your whole business. It is therefore crucial for your Kubernetes security policy design to account for control plane and management plane security using a cloud-native approach. This requires implementing policies that secure the hosts, pods with host networking, and pods within the cluster classless inter-domain routing (CIDR) range.

Recommendations:

- Define host endpoints to enable the definition of policies applied to hosts and pods with host networking.

- Implement high-level multi-tenancy for management, control, and data planes.

- Implement granular microsegmentation controls for management traffic.

- Implement granular microsegmentation controls for control plane traffic (e.g. kubelet, etcd, Calico node).
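Since the recommendations above mention Calico, here is a sketch that generates Calico `HostEndpoint` resources (API group `projectcalico.org/v3`) so host-level policies can select nodes by label. The node names, interface name, IP addresses, and the `node-role` label key are assumptions for illustration; check the field names against the Calico version you run.

```python
import json


def host_endpoint(node: str, interface: str, ip: str, role: str) -> dict:
    """Build a Calico HostEndpoint for one node interface, labelled by node role."""
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "HostEndpoint",
        "metadata": {
            "name": f"{node}-{interface}",
            "labels": {"node-role": role},  # hypothetical label key used by host policies
        },
        "spec": {"node": node, "interfaceName": interface, "expectedIPs": [ip]},
    }


if __name__ == "__main__":
    # Hypothetical inventory: (node name, interface, IP, role).
    nodes = [
        ("cp-1", "eth0", "10.0.0.10", "control-plane"),
        ("worker-1", "eth0", "10.0.1.20", "worker"),
    ]
    for args in nodes:
        print(json.dumps(host_endpoint(*args)))
```

Per Calico's documentation, traffic to a host endpoint is denied by default once the endpoint exists, apart from a set of failsafe ports, so the allow policies for kubelet, etcd, and other control plane traffic should be in place before rolling these resources out.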

#6: Ingress access control

Ingress security policies allow for implementing granular north-south controls for application ingress communications. Exposing Kubernetes services is your mechanism for making your Kubernetes applications available for external access by your organization, partners, or the internet. There are multiple methods for exposing your services, including:

- Using Kubernetes NodePort services or Ingress resources

- Using a service of type LoadBalancer

Depending on your design, source or destination network address translation (NAT) may occur, masking the original source or destination of flows. The source can be masked by a load balancer or proxy that NATs the source IP, such as an external load balancer (cloud or on-premises) or kube-proxy. Similarly, the destination IP of the service (or backing pods) can be masked by the host IP, unless you are exposing the service IP and relying on equal-cost multi-path routing (ECMP) for network load balancing, or exposing the pods and relying on an external load balancer to host the virtual IP and load balance to the backing pods.

Irrespective of your approach, your design should implement a standard for securing your exposed services in different environments (cloud and on-premises), taking into account source and destination NAT and the order of processing (pre- and post-DNAT) when implementing security policies.

Recommendations:

- Implement a standard for exposing applications in different environments.

- Consider the implications of source and destination NAT on security policy implementation and design.

- Consider leveraging data plane (such as eBPF) or external load balancer capabilities for source IP preservation where needed.

- Consider destination NAT (DNAT) and the policy order of processing (pre- or post-DNAT) when implementing security policies.
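The effect of NAT on policy matching is easier to see with a toy packet model. The sketch below is a conceptual simulation, not how any data plane is implemented: `snat` and `dnat` simply rewrite fields, and all addresses are documentation or example ranges chosen for illustration. A rule that matches on a partner CIDR only works if the source survives, and a rule that matches the NodePort only works if it is evaluated before DNAT.

```python
from dataclasses import dataclass, replace
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int


def snat(pkt: Packet, new_src: str) -> Packet:
    """Source NAT, e.g. an upstream load balancer or proxy replacing the client IP."""
    return replace(pkt, src=new_src)


def dnat(pkt: Packet, backend_ip: str, backend_port: int) -> Packet:
    """Destination NAT, e.g. a NodePort on the host rewritten to a backing pod."""
    return replace(pkt, dst=backend_ip, dport=backend_port)


def source_allowed(pkt: Packet, allowed_cidr: str) -> bool:
    """Match the packet's current source address against an allowed CIDR."""
    return ip_address(pkt.src) in ip_network(allowed_cidr)


PARTNER_CIDR = "203.0.113.0/24"

# Client packet as it reaches the node's NodePort (pre-DNAT view).
client = Packet(src="203.0.113.7", dst="192.0.2.10", dport=30080)
# The same flow if an upstream proxy applied SNAT, then the node applied DNAT (post-DNAT view).
at_pod = dnat(snat(client, "198.51.100.2"), "10.244.1.5", 8080)

if __name__ == "__main__":
    print(source_allowed(client, PARTNER_CIDR), client.dst, client.dport)
    print(source_allowed(at_pod, PARTNER_CIDR), at_pod.dst, at_pod.dport)
```

In Kubernetes, setting `externalTrafficPolicy: Local` on a NodePort or LoadBalancer service is one documented way to keep the client source IP for traffic that kube-proxy forwards; whether an external load balancer in front of the nodes also preserves it depends on that load balancer.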

#7: Egress access control

Egress security policies allow for implementing granular north-south (or rather south-north) controls for your applications’ egress communications with the outside world. An essential part of egress access control is integration with your legacy or cloud environment. Depending on your deployment setup, the source IP of egress connections can be NATed to the host IP, masking source information that your perimeter may need for enforcement. Techniques for ensuring integration with the perimeter include implementing an egress gateway for your application with a deterministic IP range, or using a controller that automatically updates perimeter firewall or security group rules.

Egress controls should not be limited to allowing trusted destinations; they should also deny connections to known malicious destinations at the source. It is recommended to centralize and tightly manage egress access controls to optimize the security posture of your cluster.

Recommendations:

- Centralize the implementation of egress security policies.

- Implement fully qualified domain name (FQDN) based egress security policies to ensure scalability and avoid backdoors to dynamic destinations.

- Deny egress access to known malicious destinations using dynamic threat feeds.

- Design your egress security policies to integrate with your perimeter security.
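The egress recommendations combine into a simple evaluation order: deny known-bad destinations first, then allow only approved FQDNs, then deny everything else. The sketch below shows that order with a hypothetical central allowlist and a hypothetical threat feed held in memory; a real deployment would enforce this in the data plane and refresh the feed from a provider.

```python
from fnmatch import fnmatchcase
from ipaddress import ip_address, ip_network

# Hypothetical centrally managed allowlist of FQDN patterns.
ALLOWED_FQDNS = ["api.partner.example", "*.storage.example"]

# Hypothetical threat feed entries (normally refreshed from a feed provider).
THREAT_FEED = [ip_network("198.51.100.0/24")]


def egress_decision(fqdn: str, resolved_ip: str) -> str:
    """Return 'allow' or 'deny' for an egress connection to fqdn at resolved_ip."""
    ip = ip_address(resolved_ip)
    # Known-malicious destinations are denied first, regardless of the allowlist.
    if any(ip in net for net in THREAT_FEED):
        return "deny"
    # Normalize case and any trailing dot before matching FQDN patterns.
    name = fqdn.lower().rstrip(".")
    if any(fnmatchcase(name, pattern) for pattern in ALLOWED_FQDNS):
        return "allow"
    return "deny"  # default deny for destinations not explicitly allowed


if __name__ == "__main__":
    print(egress_decision("eu.storage.example", "203.0.113.9"))
    print(egress_decision("eu.storage.example", "198.51.100.4"))
```

Matching on names rather than IP lists keeps the policy stable when a trusted service's addresses change, while the threat-feed check still catches an allowed name that resolves to a known-bad address.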

#8: Role-based access control (RBAC)

Separation of duties in Kubernetes security policy definition is often key to meeting enterprise security and compliance requirements. Different teams, depending on their function in the organization, have their own agendas and often conflicting security requirements. The end goal should be to enable different stakeholders to provision policy as code in a fully streamlined process that ensures native compliance with your enterprise security requirements.

A common requirement for a variety of organizations of different sizes and in different stages of their Kubernetes journey is to enable different privileges in policy provisioning, based on roles in the organization.

- Security/InfoSec team – Requires Kubernetes security policy to comply with the enterprise’s security requirements, including multi-tenancy, external communication, compliance, and threat defense.

- Platform team – Requires hardening and securing the control and management planes to ensure the integrity of the cluster.

- Developers/DevOps team – Requires securing applications, which implies microsegmentation of microservices communication.

Responsibilities on Day-1 often differ from those on Day-N. For example, the platform team might take responsibility for developing application microsegmentation policies while their stack matures towards self-service policy provisioning for developers.

Recommendations:

- Implement a Kubernetes security policy design based on the following guidelines:

  - Enable different personas in your organization to provision policies based on their privileges.

  - Implement a tiered policy hierarchy that allows for defining the scope of policies, controlling precedence, and delegating granular controls to other personas while ensuring compliance with high-level controls.
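A tiered hierarchy can be modelled as ordered lists of rules where a higher tier either decides (allow or deny) or explicitly passes the decision down. This is a simplified sketch loosely inspired by tiered policy engines such as Calico's, which use a similar "pass" action; the tier names, rules, and flow fields are hypothetical, and the fall-through behavior when no rule in a tier matches is a simplification rather than any product's exact semantics.

```python
from dataclasses import dataclass
from typing import Callable

Flow = dict[str, str]


@dataclass
class Rule:
    name: str
    matches: Callable[[Flow], bool]
    action: str  # "allow", "deny", or "pass" (hand the decision to the next tier)


# Tiers are evaluated in order; earlier tiers take precedence (hypothetical contents).
TIERS: list[tuple[str, list[Rule]]] = [
    ("security", [
        Rule("block-cross-tenant", lambda f: f["src_tenant"] != f["dst_tenant"], "deny"),
        Rule("delegate-rest", lambda f: True, "pass"),
    ]),
    ("platform", [
        Rule("protect-etcd",
             lambda f: f["dst_app"] == "etcd" and f["src_app"] != "kube-apiserver", "deny"),
    ]),
    ("application", [
        Rule("frontend-to-api",
             lambda f: f["src_app"] == "frontend" and f["dst_app"] == "api", "allow"),
    ]),
]


def evaluate(flow: Flow) -> str:
    """Return the verdict and the tier/rule that produced it."""
    for tier_name, rules in TIERS:
        for rule in rules:
            if rule.matches(flow):
                if rule.action == "pass":
                    break  # skip the rest of this tier and continue with the next one
                return f"{rule.action} ({tier_name}/{rule.name})"
    return "deny (default)"
```

The property worth noticing is precedence: a developer's `allow` in the application tier is never reached for a cross-tenant flow, because the security tier decides first.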

#9: Security governance

Policy enforcement depends directly on endpoints in a Kubernetes environment carrying the authorized labels. Depending on the policy deployment model (centralized or decentralized), control over policy implementation may be exercised early in the CI/CD pipeline, in the application manifest repositories, or at deployment time in the Kubernetes cluster.

In a decentralized policy deployment model, developers may have the privileges to deploy applications to the Kubernetes cluster via their own CI/CD pipelines, which limits how much validation can happen early in the process. A requirement for this model is to restrict the creation of namespaces and their associated labels. This ensures compliance based on namespace labels, irrespective of application labels.

A preferred approach for scalability and standardization is adopting a centralized policy deployment model. In this case, different stakeholders may have their own CI tools feeding into repositories for validation and approval, and centralized CD tools would be responsible for deployment of approved policies.

In addition to implementing security checks for label assurance, it is crucial to ensure containers are deployed with the right privileges and have access to authorized resources. Security policies implement controls at the pod and host level, but inherently require that containers are unable to bypass those controls through escalation of privileges, access to unauthorized data, or resource exhaustion. This is an essential component of defense in depth, where multiple security gates are implemented at the container, pod, and host levels.

Recommendations:

- Implement pull request policies to ensure application manifests carry the authorized labels.

- Implement admission controller policies to ensure deployed application manifests carry the authorized labels.

- Implement admission controller policies for container security, including Pod Security Standards (enforced by the built-in Pod Security Admission controller, which replaced the deprecated PodSecurityPolicy) and resource quotas and limits.

- Implement an approval process for labels that grant special access privileges, such as egress access, ingress access, or compliance environment.
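The label and container checks above can be expressed as a single validation function, the kind of logic that would run in a pull request check or behind an admission webhook (tools such as OPA Gatekeeper or Kyverno are common places to host it). This sketch is not a webhook; the required label keys, the privileged label keys, and the `approved` set are hypothetical stand-ins for your own label standard and approval records.

```python
# Hypothetical label standard: keys every workload must carry, and keys that
# grant special access and therefore need an approval record.
REQUIRED_LABELS = {"app", "tenant", "environment"}
PRIVILEGED_LABELS = {"egress-access", "ingress-access", "compliance-env"}


def validate_pod(pod: dict, approved: set[str]) -> list[str]:
    """Return a list of violations; an empty list means the pod may be admitted."""
    violations = []
    labels = pod.get("metadata", {}).get("labels", {})
    for key in sorted(REQUIRED_LABELS - set(labels)):
        violations.append(f"missing required label '{key}'")
    for key in sorted(PRIVILEGED_LABELS & set(labels)):
        if key not in approved:
            violations.append(f"label '{key}' requires approval")
    for c in pod.get("spec", {}).get("containers", []):
        sc = c.get("securityContext", {})
        if sc.get("privileged"):
            violations.append(f"container '{c['name']}' is privileged")
        # Treat an unset allowPrivilegeEscalation as allowed, the conservative reading.
        if sc.get("allowPrivilegeEscalation", True):
            violations.append(f"container '{c['name']}' allows privilege escalation")
        if "limits" not in c.get("resources", {}):
            violations.append(f"container '{c['name']}' has no resource limits")
    return violations
```

Returning every violation, rather than stopping at the first, gives developers a complete list to fix in one iteration of the pipeline.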

#10: Security policy centralization and standardization

A cloud-native application development approach allows for the flexibility of deployment. Your Kubernetes security policy design should provide the same flexibility of applying the same controls to multiple cloud and on-premises clusters. A GitOps approach is a great fit for implementing a centralized Kubernetes security policy model in different environments:

- Policy manifests would reside in centralized repositories where authorized approval is enforced.

- Centralized repositories would include standard policies applicable to all environments, in addition to policies specific to different environments.

- The GitOps operator would be responsible for ensuring that policies in all clusters comply with your intent, and your repositories would always be your single source of truth.

Other approaches include vendor-specific multi-cluster management (e.g. the Calico multi-cluster management feature) or Kubernetes Cluster Federation (KubeFed).

Recommendations:

- Implement a centralized and standard Kubernetes security policy model.

- Ensure compliance at all times with your intent as defined in your centralized repository.

- Implement RBAC-based review and approval of centralized policies.
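At its core, the GitOps model above is a continuous comparison between the policies in the repository and the policies in each cluster. The sketch below shows that comparison for policies keyed by a hypothetical `namespace/name` string, merging a standard policy set with an environment-specific overlay. GitOps operators such as Argo CD or Flux perform this reconciliation (and the resulting apply or prune) for you; this only illustrates the idea.

```python
def reconcile(desired: dict[str, dict], live: dict[str, dict]) -> dict[str, list[str]]:
    """Compare repository policies (desired) with cluster policies (live)."""
    return {
        "create": sorted(desired.keys() - live.keys()),
        "update": sorted(k for k in desired.keys() & live.keys() if desired[k] != live[k]),
        "delete": sorted(live.keys() - desired.keys()),
    }


# Hypothetical repository contents: standard policies plus an environment overlay.
STANDARD = {"shop/allow-to-api": {"port": 8080}}
PROD_OVERLAY = {"shop/allow-to-db": {"port": 5432}}

if __name__ == "__main__":
    desired = {**STANDARD, **PROD_OVERLAY}
    live = {"shop/allow-to-api": {"port": 9090}, "shop/debug-allow-all": {}}
    print(reconcile(desired, live))
```

Anything in the cluster that is not in the repository shows up under `delete`, which is how drift (such as a manually applied debug policy) is detected and reverted.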

If you have not done so already, I highly recommend reviewing my article on label standards and best practices for Kubernetes security. It provides a framework for developing a label standard aligned with your security policy design.

To learn more about new cloud-native approaches for establishing security and observability with Kubernetes, check out the early release of this O’Reilly eBook, authored by Tigera.
