At KubeCon 2023 in Amsterdam, Azure made several exciting announcements and introduced a range of updates and new options for Azure-CNI (Azure Container Networking Interface). These changes help Azure Kubernetes Service (AKS) users solve pain points they faced in previous iterations of Azure-CNI, such as IP exhaustion and large cluster deployments with custom IP address management (IPAM). On top of that, with this announcement Microsoft officially added an additional dataplane to the Azure platform.

The big picture

Worker nodes in an AKS (Azure Kubernetes Service) cluster are Azure VMs pre-configured with a version of Kubernetes that has been tested and certified by Azure. These clusters communicate with other Azure resources and external sources (including the internet) via the Azure virtual network (VNet).

Now, let’s delve into the role of the dataplane within this context. Dataplane operations take place within each Kubernetes node, and the dataplane is responsible for handling the communication between your workloads and cluster resources. By default, an AKS cluster is configured to use the Azure dataplane, which under the hood uses iptables and standard Linux routing. Standard Linux routing is an excellent choice of dataplane: it has been battle tested over the years, and companies like OpenAI depend on it to serve the massive number of users that reach their AI models every second. However, there are certain areas, such as observability and tracing, where standard Linux routing generates unnecessary overhead.

The new Azure-CNI announcement also includes an official port of the Cilium eBPF dataplane that can be selected at the time of cluster creation. Similar to the Calico eBPF dataplane, which is also available for AKS and all other major cloud providers, it offers faster service routing and efficient network policy enforcement. eBPF technology has gained significant traction in the industry, with companies like Cloudflare and Datadog relying on eBPF programs to power their services and tap into the kernel to gain a better understanding of their applications’ inner workings. eBPF is a safe, quick, and effective way to interact with the Linux kernel to achieve tasks such as packet processing and many more.

In this blog post, we will go through some of these improvements and examine the benefits that they provide in your AKS cloud-native journey.

AKS networking

AKS is a managed Kubernetes service that allows you to use the infrastructure of Azure data centers to run your applications in the cloud. This allows you to swiftly distribute your workloads across various locations worldwide, ensuring optimal performance and availability without the need to invest in running and maintaining your own physical data centers.

In Kubernetes, network capabilities are delegated to the Container Networking Interface (CNI) via the Container Runtime Interface (CRI) on each node. AKS offers multiple networking configurations that can be used to set up networking in a cluster:

- Kubenet (Basic networking)

- Azure-CNI (Advanced networking)

- Bring Your Own CNI

While all of these options can be used to implement networking for your AKS cluster, their feature sets and capabilities differ from one another.

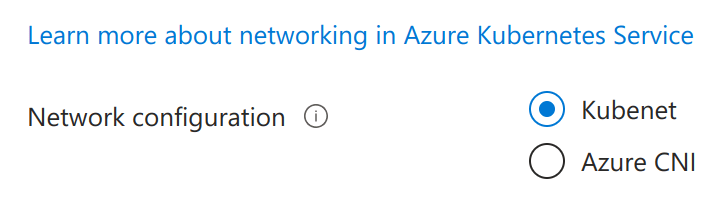

The following picture illustrates your AKS networking choices:

Kubenet (Basic networking)

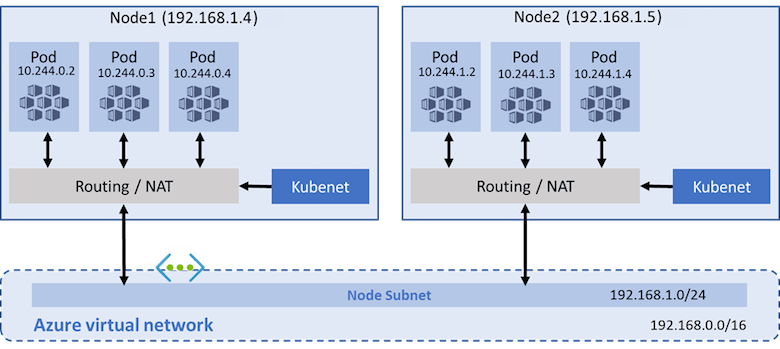

In Kubenet mode, the nodes in an AKS cluster are connected to the Azure Virtual Network (VNet) and acquire their IP addresses from the Azure infrastructure. Pods on the other hand communicate with a local IPAM to acquire IP addresses. This means that Azure VNet is not aware of the Pod IP CIDR (Classless Inter-Domain Routing) by default.

The following image illustrates the architecture of Kubenet mode:

As a result, pods running on different nodes within the AKS cluster cannot directly communicate with each other.

To address this situation, a User Defined Route (UDR) is required. A UDR is a route entry that is associated with a subnet within Azure VNet. By configuring a UDR, you can define specific routes and enable communication between pods in different nodes.

It’s worth noting that there is a maximum limit of 400 UDRs that can be configured within an Azure VNet, and Kubenet offers no support for Windows nodes. At the time of cluster creation, you can use the --pod-cidr or --pod-cidrs argument (with Azure-CLI, or the equivalent value in the Azure portal) to automatically configure the intended CIDRs for your cluster. However, any additional CIDR after that point requires the administrator to configure a manual entry via the Azure portal or Azure-CLI.
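To make the UDR idea more concrete, here is a minimal sketch of the kind of route table Kubenet relies on: one route per node, pointing that node's slice of the pod CIDR at the node's VNet IP. The addresses, the `build_pod_routes` helper, and the /24-per-node split are assumptions made for illustration and are not taken from any Azure API.

```python
import ipaddress

MAX_ROUTES = 400  # UDR limit quoted in the text above


def build_pod_routes(pod_cidr, node_ips, per_node_prefix=24):
    """Pair each node with its own slice of the pod CIDR.

    Returns a list of (address_prefix, next_hop_ip) tuples, one per node.
    The /24-per-node split is an assumption made for this sketch.
    """
    if len(node_ips) > MAX_ROUTES:
        raise ValueError(f"{len(node_ips)} nodes need more than {MAX_ROUTES} routes")
    blocks = ipaddress.ip_network(pod_cidr).subnets(new_prefix=per_node_prefix)
    routes = [(str(block), node_ip) for node_ip, block in zip(node_ips, blocks)]
    if len(routes) < len(node_ips):
        raise ValueError("pod CIDR is too small for this many nodes")
    return routes


# Hypothetical node IPs inside the VNet subnet.
routes = build_pod_routes("10.244.0.0/16", ["10.240.0.4", "10.240.0.5", "10.240.0.6"])
for prefix, next_hop in routes:
    print(f"{prefix} -> {next_hop}")
# 10.244.0.0/24 -> 10.240.0.4
# 10.244.1.0/24 -> 10.240.0.5
# 10.244.2.0/24 -> 10.240.0.6
```

Because every node needs its own route entry, the UDR limit effectively becomes a ceiling on the number of nodes in a Kubenet cluster.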

Note: Kubenet mode is sometimes referred to as “Basic” in Microsoft documentation.

Azure-CNI (Advanced networking)

Azure-CNI is another networking option that lets you deploy massive clusters (up to 5,000 nodes) and implement advanced networking scenarios in your cloud environment. Azure-CNI can be configured in two modes:

- VNet mode

- Overlay mode

VNet mode

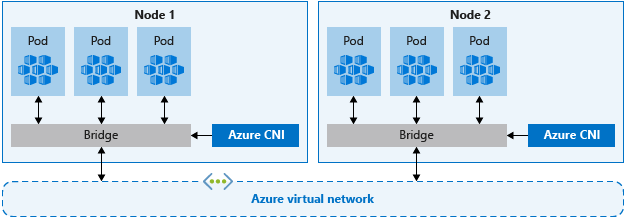

The following image illustrates the architecture of VNet mode:

The Azure-CNI VNet mode is considered the most performant approach to running an AKS cluster. This mode establishes a direct connection between your Kubernetes cluster and the Azure Virtual Network (VNet), enabling efficient networking and IP address assignment for nodes and pods. On top of that, VNet mode supports the deployment of Windows nodes.

It is worth noting that while VNet mode offers a lot of advantages, such as the best performance and multiplatform support, it requires a higher level of architectural awareness and future planning because of the way it consumes the IP addresses that are available in a VNet. To expand on this, let’s consider a scenario where a cluster is deployed within a small CIDR range. In such cases, the scalability of that cluster will be limited by the number of IP addresses available within that CIDR range. Additionally, any other Azure resources (such as nodes, gateways, and NAT resources) deployed within the same CIDR range will further reduce the capacity to run new workloads in the cluster. If you find yourself in a situation where you need to expand your cluster but are constrained by the limited CIDR range, the only solution would be to delete the existing cluster and create a new one with a larger CIDR range.
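A quick back-of-the-envelope calculation shows how fast a subnet fills up in VNet mode. The sketch below assumes that each node consumes one address for itself plus one per pod slot, that the per-node pod limit is 30, and that Azure reserves five addresses in every subnet. Treat these numbers as assumptions to check against your own cluster settings; `max_nodes_for_subnet` is a hypothetical helper, not part of any Azure tooling.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # assumption: addresses Azure keeps for itself in each subnet


def max_nodes_for_subnet(subnet_cidr, max_pods_per_node=30, other_resources=0):
    """Estimate how many nodes fit in a subnet when pods take VNet IPs.

    Each node is assumed to use 1 IP for itself plus max_pods_per_node
    IPs for its pods. other_resources is the number of addresses already
    taken by gateways, NAT resources, and so on.
    """
    total = ipaddress.ip_network(subnet_cidr).num_addresses
    usable = total - AZURE_RESERVED_PER_SUBNET - other_resources
    per_node = max_pods_per_node + 1
    return max(usable // per_node, 0)


print(max_nodes_for_subnet("10.0.0.0/24"))                      # 8
print(max_nodes_for_subnet("10.0.0.0/24", other_resources=10))  # 7
print(max_nodes_for_subnet("10.0.0.0/16"))                      # 2113
```

Under these assumptions a /24 subnet tops out at eight nodes, which is why the CIDR range has to be sized for future growth before the cluster is created.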

Overlay mode (New)

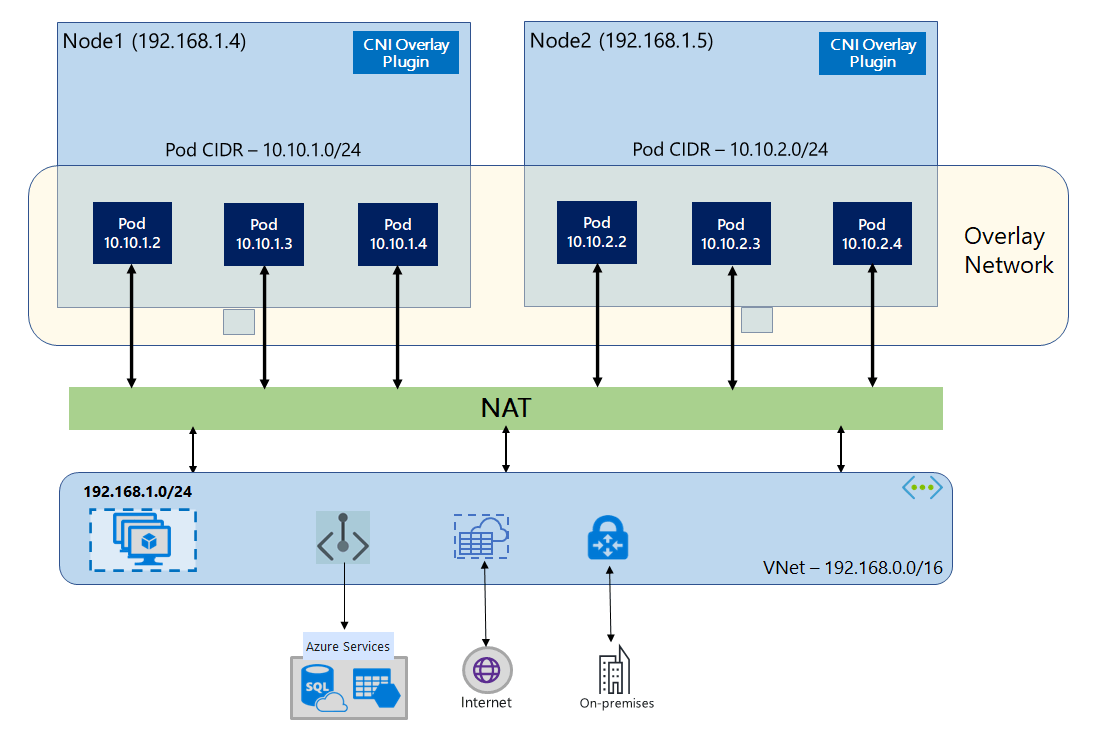

The following image illustrates the architecture of Overlay mode:

The Azure-CNI Overlay mode, which was introduced at KubeCon Europe 2023, is a new way to deploy an AKS cluster. It offers scalability and performance similar to VNet mode while introducing the long-awaited capability of using a different CIDR for the internal Kubernetes resources, which was previously only available with Kubenet. On top of that, the Overlay networking mode also supports multiplatform nodes and has official support for deploying Windows nodes.

In Overlay networking mode, Kubernetes cluster nodes are assigned IPs from the Azure VNet subnet, while Pods receive IPs from a private CIDR that is provided at the time of cluster creation. Each node is a member of a virtual machine scale set and is assigned a /24 block from that private CIDR.
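The arithmetic behind Overlay mode can be sketched in a few lines. The example below splits a private pod CIDR into per-node /24 blocks and reports how many nodes it can serve. The CIDR values and the `overlay_plan` helper are hypothetical, and the overlap check is an assumption added here to reflect the idea that the pod CIDR is separate from the node subnet.

```python
import ipaddress


def overlay_plan(node_subnet, pod_cidr, node_block_prefix=24):
    """Summarise how a private pod CIDR divides into per-node blocks."""
    nodes_net = ipaddress.ip_network(node_subnet)
    pods_net = ipaddress.ip_network(pod_cidr)
    # Assumption for this sketch: the overlay CIDR is kept separate from the node subnet.
    if nodes_net.overlaps(pods_net):
        raise ValueError("pod CIDR must not overlap the node subnet")
    if pods_net.prefixlen > node_block_prefix:
        raise ValueError("pod CIDR is smaller than a single per-node block")
    return {
        # Number of /24 blocks, so the number of nodes the CIDR can serve.
        "node_blocks": 2 ** (node_block_prefix - pods_net.prefixlen),
        # Addresses inside each per-node block.
        "pod_ips_per_node_block": 2 ** (pods_net.max_prefixlen - node_block_prefix),
    }


plan = overlay_plan("10.240.0.0/16", "192.168.0.0/16")
print(plan)  # {'node_blocks': 256, 'pod_ips_per_node_block': 256}
```

The point to notice is that pods no longer draw from the VNet subnet, so node count and pod count can be planned independently of each other.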

AKS Policy enforcement (Official options)

On their own, neither Kubenet nor Azure-CNI enforces network policies, but both can be combined with a network policy implementation that takes over enforcement. Currently, Microsoft officially supports two policy engines: Azure and Calico.

The following picture shows the available networking plugins that are currently available in the Azure portal:

Azure network policy manager

The Azure network policy manager is the official policy engine from the Microsoft Azure team, and it fully supports the Kubernetes policy specification. It supports both Linux (iptables) and Windows (HNS) nodes. Azure network policy manager translates policies to sets of IP pairs and then programs them by using iptables/HNS ACL policy filter rules.
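The idea of translating a label-based policy into sets of IP pairs can be illustrated with a toy example. This is not how Azure network policy manager is implemented; it is only a sketch of the concept, with hypothetical pods, labels, and helper names.

```python
from itertools import product


def matches(selector, labels):
    """True when every key/value in the selector is present in the pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def allowed_ip_pairs(pods, source_selector, target_selector):
    """Expand 'pods matching source may reach pods matching target' into (src, dst) IP pairs."""
    sources = [pod["ip"] for pod in pods if matches(source_selector, pod["labels"])]
    targets = [pod["ip"] for pod in pods if matches(target_selector, pod["labels"])]
    return sorted(product(sources, targets))


# Hypothetical pods and their overlay IPs.
pods = [
    {"ip": "10.244.0.10", "labels": {"app": "frontend"}},
    {"ip": "10.244.1.7", "labels": {"app": "frontend"}},
    {"ip": "10.244.2.3", "labels": {"app": "db"}},
]

pairs = allowed_ip_pairs(pods, {"app": "frontend"}, {"app": "db"})
print(pairs)
# [('10.244.0.10', '10.244.2.3'), ('10.244.1.7', '10.244.2.3')]
```

Each resulting pair is the kind of entry that a policy engine would then turn into a filter rule on the node.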

Calico as a policy engine

Calico is a networking and security solution for containers, virtual machines, and native host-based workloads. Similar to Azure network policy manager, Calico fully supports the Kubernetes policy specification. On top of that, Calico extends the capabilities of your cluster with two unique resources (Calico network policy and Calico global network policy) that offer a more expressive language, making it easier to target the intended flows and write a single policy that can secure your cluster as a whole. While the default way to implement policies is via iptables, in eBPF mode Calico can translate your policies into eBPF programs to provide more efficient network policy enforcement.
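As a rough illustration of what a single cluster-wide policy can look like, the sketch below builds a Calico global network policy as a Python dictionary and prints it as JSON. The policy name, label selectors, and port are hypothetical, and the exact fields available should be checked against the Calico documentation for the version you run.

```python
import json

# Hypothetical policy: only pods labelled app=frontend may reach
# pods labelled app=db on TCP port 5432, in every namespace.
global_policy = {
    "apiVersion": "projectcalico.org/v3",
    "kind": "GlobalNetworkPolicy",
    "metadata": {"name": "allow-frontend-to-db"},
    "spec": {
        "selector": "app == 'db'",  # workloads the policy applies to
        "types": ["Ingress"],
        "ingress": [
            {
                "action": "Allow",
                "protocol": "TCP",
                "source": {"selector": "app == 'frontend'"},
                "destination": {"ports": [5432]},
            }
        ],
    },
}

manifest = json.dumps(global_policy, indent=2)
print(manifest)
```

Because the resource is not tied to a namespace, one policy of this shape covers matching workloads across the whole cluster.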

It’s important to note that in this mode, all Calico features, including the pluggable dataplane and global security policies, are fully supported and operational in AKS.

Note: Please refer to the official Microsoft documentation for a detailed list of features and limitations for Azure network policy manager and Calico.

Azure CNI Powered by Cilium (GA)

As we mentioned earlier, the default dataplane of a newly created AKS cluster is based on standard Linux routing. This default configuration uses iptables and kube-proxy to establish networking for the cluster. The Powered by Cilium option allows you to create a cluster preconfigured with an eBPF dataplane. Currently, it is possible to create such a cluster in two modes: VNet and Overlay.

For the complete list of features and limitations please refer to Microsoft’s documentation.
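Since the networking mode is chosen at cluster creation, it can help to see how the choices map onto Azure-CLI arguments. The sketch below only assembles and prints the command line; it does not run it. The resource group and cluster names are placeholders, VNet-mode variants that need extra subnet arguments are left out, and the flag names should be verified against the Azure-CLI version you have installed.

```python
import shlex

# Networking choices mapped to `az aks create` flags (verify against your CLI version).
NETWORK_FLAGS = {
    "kubenet": ["--network-plugin", "kubenet"],
    "azure-vnet": ["--network-plugin", "azure"],
    "azure-overlay": ["--network-plugin", "azure", "--network-plugin-mode", "overlay"],
    "cilium-overlay": [
        "--network-plugin", "azure",
        "--network-plugin-mode", "overlay",
        "--network-dataplane", "cilium",
    ],
    "byocni": ["--network-plugin", "none"],
}


def aks_create_command(resource_group, cluster_name, mode, pod_cidr=None):
    """Return the argument list for creating a cluster with the chosen networking mode."""
    if mode not in NETWORK_FLAGS:
        raise ValueError(f"unknown networking mode: {mode}")
    args = [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        *NETWORK_FLAGS[mode],
    ]
    if pod_cidr is not None:
        args += ["--pod-cidr", pod_cidr]
    return args


# Placeholder names; substitute your own resource group and cluster.
command = aks_create_command("my-rg", "my-aks", "cilium-overlay", pod_cidr="192.168.0.0/16")
print(shlex.join(command))
```

Keeping the mapping in one table makes it easy to compare what actually changes between modes: the plugin, the plugin mode, and the dataplane.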

The following chart illustrates Azure-CNI options that are currently available:

| | Kubenet | Azure CNI (VNet) | Azure CNI (Overlay) | Azure CNI Powered by Cilium (VNet and Overlay) |
|---|---|---|---|---|
| Supported dataplane | IPtables * eBPF, HNS ** | IPtables * eBPF, HNS ** | IPtables * eBPF, HNS ** | eBPF |
| Network Policies | | | | Kubernetes network policy |
| Limitations | | | | |
| OS platforms supported | Linux only | Linux and Windows | Linux and Windows Server 2022 (Preview) | Linux only |

* Requires Azure Network Policies or Calico to be enabled.

** Requires Calico

Azure Bring your own CNI

Bring your own CNI (BYOCNI) deploys an AKS cluster without any preconfigured CNI plugin, which is a great way to run a cluster with your desired CNI. In this mode, you are free to change and run any version of your desired CNI and configure it as you see fit. However, it is worth noting that in this mode, support for the third-party CNI that you install on your cluster comes down to you and the company or community that owns that CNI project.

An AKS cluster configured with BYOCNI method will give you the most flexible and scalable deployment that you can achieve for your cloud environment. This approach allows you to use your desired CNI, thereby benefiting from the latest features and security improvements that it offers.

For example, similar to Azure-CNI in Overlay mode, BYOCNI allows you to expand your cluster by using a private CIDR. The difference is that in a BYOCNI scenario you are in charge of every part of your network implementation, and you have access to the full feature set that your desired CNI solution provides. This combination allows you to shape your cloud-native environment in the most efficient way for your scenario.

Conclusion

In conclusion, the Azure-CNI overlay mode stands out as the preferred approach for deploying large Kubernetes clusters. Its versatility allows it to accommodate various requirements, making it a compelling choice for different use cases.

Deploying a cluster with a preconfigured CNI offers the advantage of official support from Microsoft. However, it comes with trade-offs as it may impose restrictions on CNI plugin upgrades, patch releases, and supported features. These limitations can hinder the utilization of the full capabilities provided by the desired CNI solution.

On the other hand, BYOCNI gives you the most flexibility. This setup, when paired with a capable CNI, provides the tools to leverage every aspect of the cloud provider’s capabilities and build a cluster environment tuned for your needs. For example, Calico’s pluggable dataplane architecture lets you choose between the high-performance eBPF dataplane and reliable standard Linux routing to get the most efficient networking for your cluster. Security features such as Calico’s WireGuard integration and application layer policy let you implement a service mesh and secure your application at every level of the OSI model. The flexibility of this approach empowers users to optimize their AKS clusters according to their specific networking and security requirements and preferences, making it an attractive trade-off for those seeking customization and full control over their Kubernetes infrastructure.

Ready to try Calico for yourself? Get started with a free Calico Cloud trial.
