Kubernetes is an excellent solution for building a flexible and scalable infrastructure to run dynamic workloads. However, as our environment expands, we will inevitably need to scale and manage multiple clusters concurrently. This can add a lot of complexity to day-to-day workload maintenance and makes it harder to keep policies and services up to date in all environments. In such an environment, a cluster mesh can establish seamless connectivity between these clusters and integrate workloads into a unified networking environment.

A cluster mesh links independent Kubernetes clusters and connects resources in different clusters, delivering fault tolerance and high availability beyond what is possible in a single-cluster scenario.

In this blog post, we will guide you through the required steps to build a multi-cluster environment and establish a cluster mesh, utilizing the versatile capabilities of Calico Open Source. We’ll explore different methods such as Top of the Rack (TOR) and overlay to set up a cluster mesh, addressing unique networking challenges posed by diverse environments. This is possible since Calico offers multiple approaches to establish a multi-cluster environment, providing the flexibility to align with your networking infrastructure and specific requirements. Additionally, we’ll shed light on incorporating DNS connectivity to enhance inter-cluster communication.

As your cluster-mesh environment scales, the next steps involve federation and multi-cluster management with Calico Enterprise, so we will also venture into federated clusters. We’ll showcase how Calico provides a multi-cluster management plane, allowing seamless security implementation and observability across clusters.

Finally, we’ll touch upon how Calico Enterprise federated identities can bridge the gap in a multi-cluster environment, providing a unified way to tailor network policies that can reference resources from different clusters and build services to load balance requests across cluster borders.

What is a cluster mesh?

A cluster mesh connects internal resources within two or more independent clusters. Typically, each Kubernetes cluster assigns private IP addresses to internal resources, and these are not visible to any external entity unless a NodePort or LoadBalancer service is associated with them. However, exposing resources to everyone can be a security risk and undermines the zero-trust security posture that you should maintain to secure your environment. On top of that, it can jeopardize your whole environment by allowing malicious users to exploit a vulnerability and get a foothold in your crucial services.

Establishing a cluster mesh within a multi-cluster environment presents a secure mechanism for facilitating direct communication between clusters. This communication can occur through designated cluster services or private IP addresses, ensuring a robust and controlled interaction while mitigating the risks of exposing internal resources to the broader network.

How to build a multi-cluster environment and establish a cluster mesh

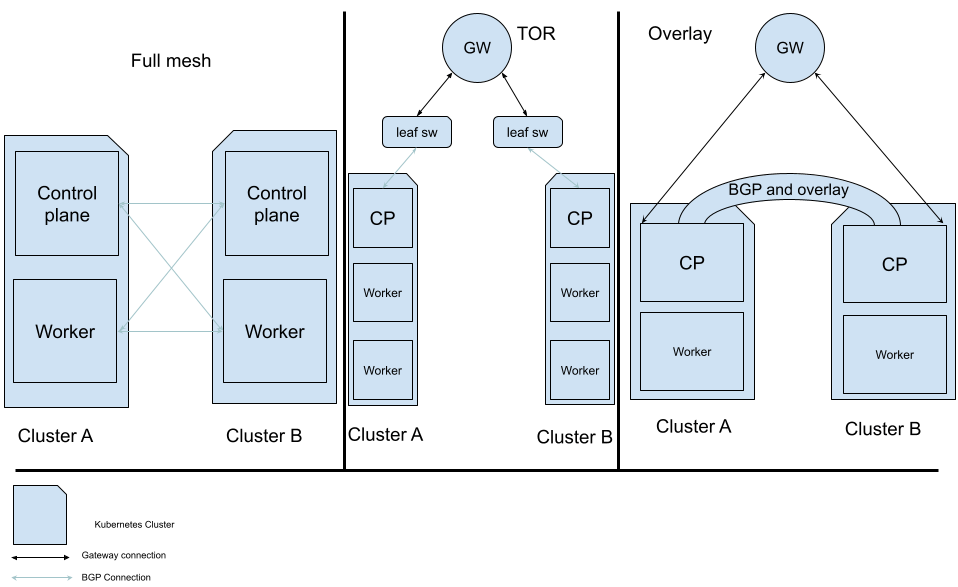

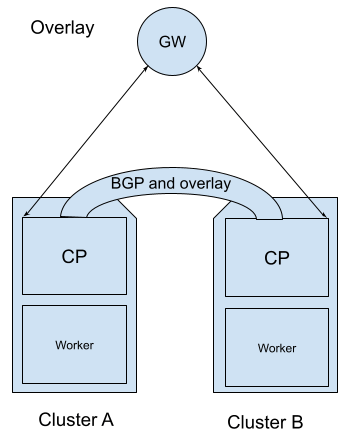

The following picture illustrates how Calico can set up cluster-mesh connectivity between your clusters.

Calico provides different ways to build a multi-cluster environment, giving you the flexibility to align with your networking infrastructure and requirements. The best part is that after two clusters are joined, Calico automatically provides the cluster mesh, and you can start using resources in different clusters through their internal cluster services and IPs.

Cluster mesh in a flat networking environment

The configuration of a cluster mesh can vary depending on the underlying infrastructure, but its fundamental purpose remains the same: it is deployed to establish connectivity between standalone clusters.

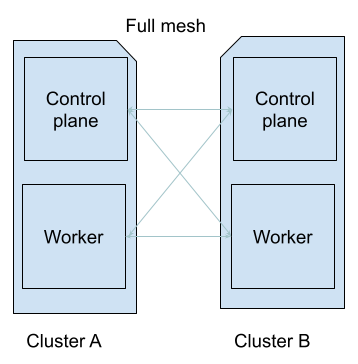

As an example, in an environment where participating nodes of our clusters are connected directly via a broadcast domain, we can quickly establish a mesh with a routing protocol that propagates internal routes to external entities. This will allow us to advertise internal routes without exposing them via a nodeport service to our intended services.

The following image illustrates a common design for flat network:

Consider viewing our video, BGP for Kubernetes with Calico open-source, for a comprehensive tutorial on deploying a cluster mesh using the full-mesh method.
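
Propagating routes between clusters only works cleanly when each cluster uses its own pod and service address ranges; if two clusters advertise the same range, the routes collide. The following is a minimal sketch of a pre-flight check, not part of Calico itself. The function name `find_overlaps`, the cluster names, and the CIDR values are all hypothetical placeholders chosen for illustration.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cluster_cidrs):
    """Return overlapping ranges that belong to *different* clusters.

    cluster_cidrs maps a cluster name to a list of CIDR strings
    (pod and service ranges). Each result is a tuple of
    (cluster_a, cidr_a, cluster_b, cidr_b).
    """
    entries = [
        (name, ipaddress.ip_network(cidr))
        for name, cidrs in cluster_cidrs.items()
        for cidr in cidrs
    ]
    overlaps = []
    for (name_a, net_a), (name_b, net_b) in combinations(entries, 2):
        # Only compare ranges across clusters and within the same IP family.
        if name_a == name_b or net_a.version != net_b.version:
            continue
        if net_a.overlaps(net_b):
            overlaps.append((name_a, str(net_a), name_b, str(net_b)))
    return overlaps


if __name__ == "__main__":
    # Placeholder ranges; substitute the values used by your own clusters.
    clusters = {
        "cluster-a": ["10.48.0.0/16", "10.96.0.0/16"],
        "cluster-b": ["10.49.0.0/16", "10.97.0.0/16"],
    }
    print(find_overlaps(clusters) or "no overlapping ranges")
```

Running a check like this before joining clusters makes an address conflict visible before any routes are advertised.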

Cluster mesh in an enterprise or cloud networking environment

In a complex networking environment such as the cloud or an enterprise network, the underlying infrastructure resources that form the cluster are usually separated into individual broadcast domains. As a result, each entity within these domains must traverse a gateway to reach its intended destination. However, by default, this additional hop (the gateway) is not aware of the internal cluster resources that we create within our Kubernetes clusters. Consequently, the gateway drops packets bound for these internal resources, rendering simple routing methods inadequate.

Now that we know about the problem, let’s review how Calico can be utilized to solve such an issue.

In order to establish a cluster mesh in such an environment we can use two methods:

- Top of the rack (TOR)

- Overlay

TOR method (Recommended)

In an enterprise or cloud environment, resources are typically interconnected through an intermediary gateway. We usually recommend TOR to our customers, since building a route propagation mechanism with the cloud provider automatically makes your cluster aware of all cloud resources that are under your supervision. It also helps make your cluster highly available through the underlying network infrastructure that cloud providers offer.

If you can configure the cloud gateway and pair it with a robust Container Network Interface (CNI) like Calico, you can use BGP routing to establish a cluster mesh. Calico’s BGP integration offers dynamic routing, allowing you to propagate internal pod and cluster IP routes to other resources in your network infrastructure.

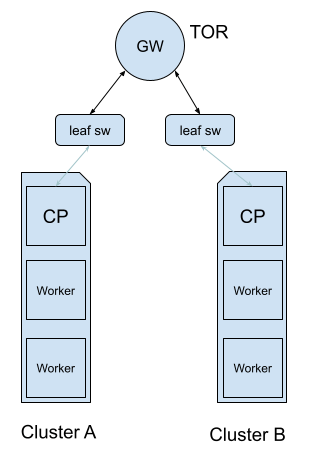

The following image illustrates a common design for a BGP capable cloud environment:

In many scenarios, the TOR method enhances high availability and fault tolerance. It optimizes network traffic distribution, mitigates single points of failure, and fortifies the reliability and resilience of your infrastructure.

Note: View this tutorial for a comprehensive guide on deploying a cluster mesh using the TOR method.
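
To make the BGP peering described above more concrete, here is a minimal sketch that builds two Calico resources as plain Python dictionaries and prints them as JSON: a `BGPConfiguration` that sets the cluster AS number and advertises the service cluster IP range, and a `BGPPeer` that points at the gateway. The helper names, the AS numbers, the gateway address `192.0.2.1`, and the service CIDR are hypothetical placeholders, and your gateway needs a matching peering configuration that is not shown here.

```python
import json


def bgp_configuration(as_number, service_cidr):
    """Build a default BGPConfiguration that advertises service cluster IPs."""
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "BGPConfiguration",
        "metadata": {"name": "default"},
        "spec": {
            "asNumber": as_number,
            "serviceClusterIPs": [{"cidr": service_cidr}],
        },
    }


def bgp_peer(name, peer_ip, peer_as):
    """Build a BGPPeer that peers the cluster nodes with one external router."""
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "BGPPeer",
        "metadata": {"name": name},
        "spec": {"peerIP": peer_ip, "asNumber": peer_as},
    }


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    manifests = (
        bgp_configuration(64512, "10.96.0.0/12"),
        bgp_peer("tor-gateway", "192.0.2.1", 64513),
    )
    for manifest in manifests:
        print(json.dumps(manifest, indent=2))
```

Each printed document can be saved to its own file and applied with `calicoctl apply -f <file>`, since calicoctl reads JSON as well as YAML.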

IPIP overlay

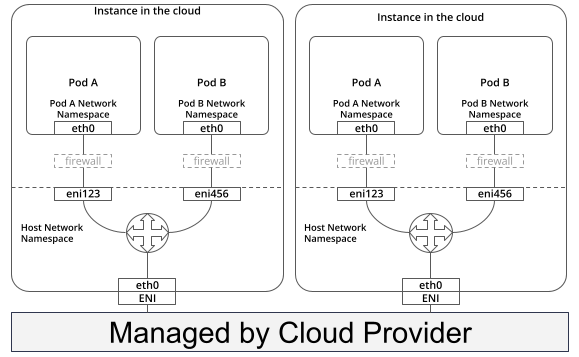

In some cases, you might not be able to access your gateway to modify its settings, or it might not support BGP peering. In such a scenario, you can use an IPIP overlay to encapsulate the traffic to your destination cluster. An overlay network allows network devices to communicate across an underlying network (referred to as the underlay) without the underlay network having any knowledge of the devices connected to the overlay network.

Note: Click here if you would like to learn more about overlay networks.
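
In Calico, IPIP encapsulation is a property of an IP pool. The sketch below builds an `IPPool` resource with `ipipMode` set and prints it as JSON. The helper name `ipip_pool`, the pool name, and the CIDR are hypothetical placeholders, and the example covers only the encapsulation setting; how the clusters learn routes to each other's pods is not shown.

```python
import ipaddress
import json

VALID_IPIP_MODES = {"Always", "CrossSubnet", "Never"}


def ipip_pool(name, cidr, mode="Always"):
    """Build a Calico IPPool manifest that uses IPIP encapsulation."""
    if mode not in VALID_IPIP_MODES:
        raise ValueError(f"unsupported ipipMode: {mode}")
    # Raises ValueError if the CIDR is malformed.
    network = ipaddress.ip_network(cidr)
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "IPPool",
        "metadata": {"name": name},
        "spec": {
            "cidr": str(network),
            "ipipMode": mode,
            # IPIP and VXLAN cannot both be enabled on one pool.
            "vxlanMode": "Never",
        },
    }


if __name__ == "__main__":
    # Placeholder name and range for illustration only.
    print(json.dumps(ipip_pool("cluster-a-pods", "10.48.0.0/16"), indent=2))
```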

VXLAN overlay (Calico Enterprise)

The upcoming Calico Enterprise (3.18+) release will enable VXLAN networking in the context of multi-cluster environments. One of the notable benefits of this approach is the ability to secure cross-cluster traffic with identity-aware policy (you will learn about this in the coming federation section). Calico Enterprise, through its multi-cluster networking feature, automatically extends the overlay networking to establish pod IP routes across clusters.

In a Calico Enterprise cluster mesh setup that uses VXLAN, each cluster serves as both a local and a remote cluster, with local clusters configured to retrieve endpoint and routing data from remote clusters over an encrypted channel. The VXLAN cluster mesh provides a secure, scalable, and efficient solution for managing multi-cluster networking, enabling seamless communication and identity-aware policy enforcement across clusters.

Note: Click here if you would like to learn more about VXLAN overlay.

The following image illustrates a common design for a cloud environment without BGP capabilities:

Note: View this tutorial for a comprehensive guide on deploying a cluster mesh using the TOR method.

DNS connectivity

After a multi-cluster environment is established, clusters can communicate with each other at the IP level. However, depending on the scale of your network, and because Kubernetes IP addresses can change dynamically at any time, you need an easier way to establish connectivity between these clusters. Domain name resolution can be a great addition to your cluster mesh, allowing easier inter-cluster communication.

In most Kubernetes deployments, CoreDNS serves as the primary workload responsible for resolving domain names for the cluster. To seamlessly integrate DNS with your cluster mesh, a simple modification to your CoreDNS ConfigMap is all that’s needed. Specifically, you’ll need to add the internal service IP of each other cluster’s CoreDNS as a forwarder in your configuration.

This simple adjustment enables your clusters to resolve names by sending queries to other clusters, and retrieving the corresponding IP addresses for the desired resources. This integration significantly streamlines communication within the cluster mesh, enhancing overall connectivity and making management more efficient.

Note: Consider viewing this video for a comprehensive tutorial to learn about DNS connectivity.
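
As a rough illustration of the forwarder change described above, the sketch below renders a CoreDNS server block that forwards queries for one zone to another cluster's CoreDNS service IP. It assumes the remote cluster uses its own DNS zone, here the hypothetical `cluster-b.local`, and that `10.97.0.10` is that cluster's CoreDNS service IP. Both values, and the helper name `forward_block`, are placeholders. The output is meant to be added to the Corefile in your CoreDNS ConfigMap alongside the existing server block.

```python
import ipaddress


def forward_block(zone, upstream_ips, port=53):
    """Render a Corefile server block that forwards one zone to other resolvers."""
    for ip in upstream_ips:
        # Raises ValueError if an upstream is not a valid IP address.
        ipaddress.ip_address(ip)
    lines = [
        f"{zone}:{port} {{",
        "    errors",
        "    cache 30",
        f"    forward . {' '.join(upstream_ips)}",
        "}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder zone and upstream IP for illustration only.
    print(forward_block("cluster-b.local", ["10.97.0.10"]), end="")
```

With a block like this in place, a lookup for a name under the remote zone is sent to the remote cluster's CoreDNS, while all other names continue to resolve locally.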

Federation and multi-cluster management (The next step)

As your environment scales, you may encounter scenarios where multiple teams must work in parallel across all clusters. A common issue that multi-cluster management (MCM) resolves is the centralized handling of objects from different clusters, including network policies, pods, compliance reports, observability data, and security logs.

While it’s technically feasible to manually create policies, network sets, and other resources via kubectl for each cluster, this approach adds considerable complexity to your day-to-day maintenance tasks and can open an avenue for accidental credential compromise. Moreover, similar to what we explored in the previous section, it mirrors the challenges of not having a well-structured multi-cluster environment, this time in cluster management, including its networking, troubleshooting, and observability aspects.

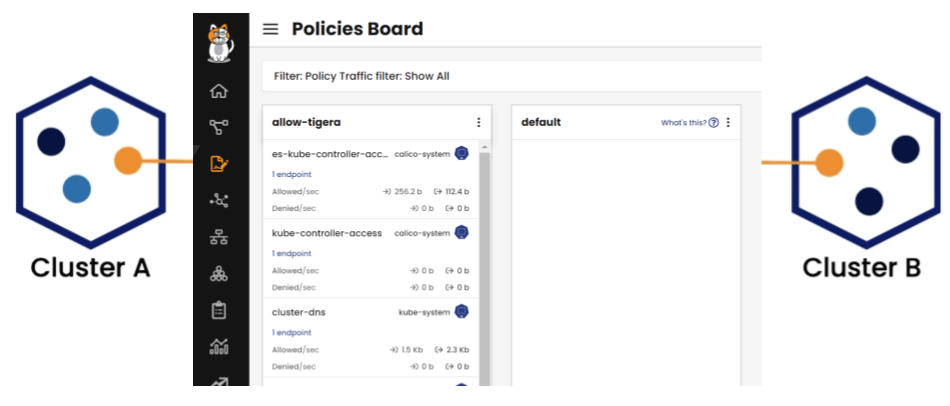

Multi-cluster management using Calico Enterprise

Calico Enterprise provides an MCM plane to enable security and observability across multiple clusters by establishing a secure connection between these clusters. This architecture also supports the federation of network policy resources across clusters and lays the foundation for true centralized management, observability and maintenance of your clusters.

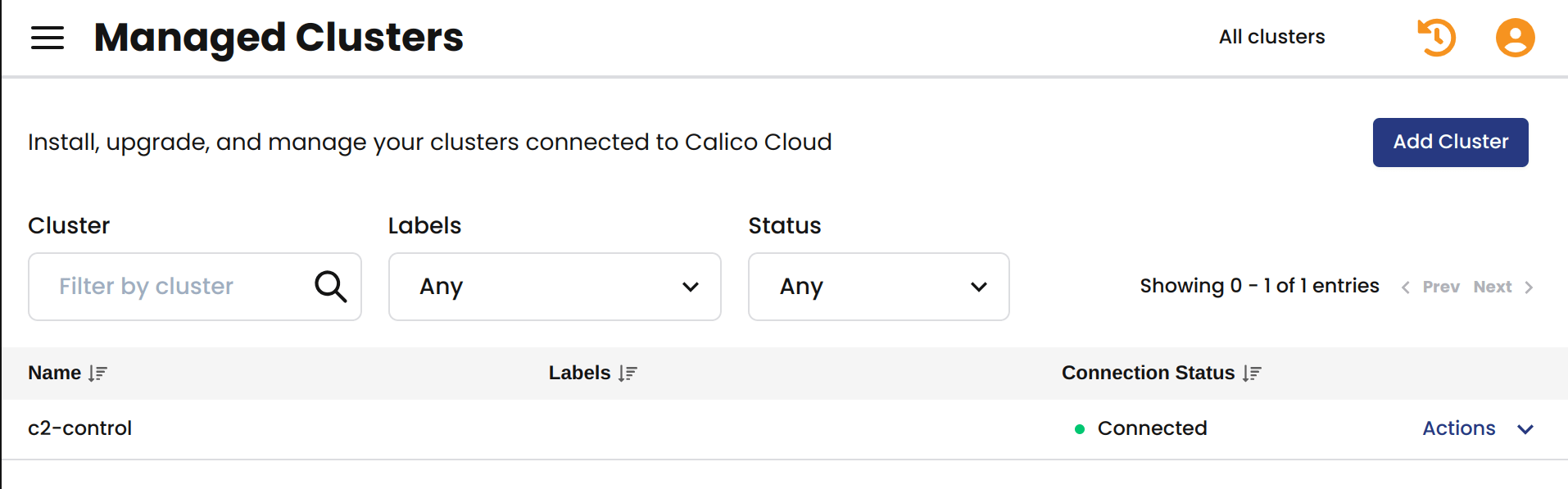

The following image is an example of the MCM page that lists all the connected clusters.

MCM security features are not limited to the network side of your cluster. Since MCM is fully integrated with Kubernetes role-based access control (RBAC), you can craft authorizations that allow users to view only the information that they need to see.

Note: Use this hands-on workshop to learn more about multi-cluster management.

Federated identities and unified policy enforcement using Calico Enterprise

Calico Enterprise federation associates workload and service endpoints with unique identities shared between clusters.

Federated identities can be associated with network security policies to craft unique resources referencing endpoints residing in different clusters, allowing for seamless control over inter-cluster security.

Additionally, through federated services, you gain the ability to discover and interact with remote pods residing in different clusters. These two key features enable the creation of precise, fine-grained security controls, fortifying the overall security posture across multiple clusters.

By implementing federated tiers and policies, you have the flexibility to define network security policies that can either apply universally across all clusters or specifically target a defined group of clusters. This approach offers an effective means to scale security measures as you expand your deployment to include multiple clusters. By extending these security controls to both existing and new clusters, you effectively minimize the duplication of policies and streamline the management of security measures, simplifying the entire process from creation to maintenance.

Conclusion

To summarize, a cluster mesh connects internal resources across different Kubernetes clusters. Calico, with its flexible cluster-mesh setup, provides the building blocks to connect multiple clusters in any environment.

Calico Enterprise MCM, federation, and federated policy enforcement emerge as the missing link for a multi-cluster architecture, allowing seamless communication while prioritizing security. Calico’s capabilities in this realm provide multi-cluster management, observability, federated services, and identities, thereby empowering your organization to navigate the complexities of modern networking with confidence and efficiency. As organizations continue to expand and scale, integrating these strategies will play a pivotal role in shaping the future of multi-cluster environments.

Get hands-on experience with multi-cluster management and its capabilities by completing our free, self-paced workshop.
