In my previous blog on Kubernetes security foundations, we discussed the growing adoption of cloud-native applications and the security challenges they present. We highlighted the limitations of traditional network firewalls in securing these applications and emphasized the importance of implementing cloud-native security policies to protect network traffic effectively.

In this blog, we will focus on one specific aspect of network security: securing egress traffic from microservices based on fully qualified domain names (FQDNs). Protecting egress traffic is crucial for ensuring the integrity and privacy of data leaving the microservices.

We will explore the challenges associated with safeguarding microservices egress traffic to destinations outside the cluster and discuss how Calico DNS logging and DNS policy can address these challenges. DNS logging allows for the collection and analysis of DNS queries made by microservices, providing valuable insights into their communication patterns and potential security risks. DNS policy enables the enforcement of granular access controls on domain names located outside the cluster, allowing only authorized requests and preventing malicious activities.

By implementing Calico DNS logging and DNS policy, organizations can gain better visibility into their microservices’ egress traffic, detect anomalies or security breaches, and establish stricter controls to protect their valuable data. This blog will provide practical demonstrations and guidance on using these features to enhance the security of cloud-native applications. By understanding and addressing the challenges associated with microservices egress traffic, organizations can strengthen the overall security of their cloud-native environments and protect sensitive information from potential threats.

Ingress and egress

By default, Kubernetes allows unrestricted communication between pods within a cluster and with external resources. Network policies change that: they let administrators define rules that govern incoming (ingress) and outgoing (egress) network traffic to and from pods. In the context of Kubernetes pods, ingress denotes incoming traffic directed at a pod, while egress refers to outgoing traffic originating from the pod. Securing network traffic primarily involves configuring these ingress and egress rules.
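As a minimal sketch of how a policy changes this default, the standard Kubernetes NetworkPolicy below selects every pod in a namespace and declares both policy types without allowing anything, which denies all ingress and egress for those pods. The namespace and policy name are hypothetical and used only for illustration.

```yaml
# Hypothetical example: default-deny for all pods in the "demo" namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all     # illustrative name
  namespace: demo            # illustrative namespace
spec:
  podSelector: {}            # empty selector matches every pod in the namespace
  policyTypes:
    - Ingress                # no ingress rules listed, so all ingress is denied
    - Egress                 # no egress rules listed, so all egress is denied
```

With a policy like this in place, traffic flows only where additional policies explicitly allow it.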

Egress access control needs and considerations

Labels and selectors, in conjunction with IP block CIDRs, are fundamental components for enforcing ingress rules that apply to all traffic sources. These mechanisms are equally important for defining egress rules that govern traffic within the cluster. However, the implementation of egress rules targeting traffic directed outside the cluster necessitates the adoption of rules based on destination domain names. This approach is critical for several reasons and comes with a number of considerations. Let’s review some of these reasons and considerations.

While DNS policy may appear simple at first glance, achieving a robust implementation requires careful consideration and meticulous execution. For example, Java applications are known for caching DNS lookups inside the JVM and disregarding the DNS TTL, so they can keep using an IP address after the DNS record has changed, which complicates DNS policy implementation. To overcome such obstacles, it is crucial to design and implement a resilient DNS policy solution.

The Importance of DNS Policy

- As Kubernetes continues to establish itself as the industry standard for managing microservices, it is essential to recognize that not all applications can be easily containerized. There are instances where pods within a Kubernetes cluster need to connect with legacy applications that are not suitable for containerization and are located outside the cluster.

- Pods may require connectivity to SaaS services, where the IP addresses of these services are beyond our control and subject to change. This becomes particularly crucial as companies increasingly adopt cloud services and need to establish connections with various cloud-based services, such as storage accounts, DB services, public repositories, container registries, and more.

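- A company’s operational policy may allow stateless applications in Kubernetes while imposing restrictions on the deployment of stateful applications. In that case, stateful services such as databases run outside the cluster, and pods need egress access to them.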

Challenges in implementing DNS Policy

- Kubernetes network policy does not inherently support defining policies based on DNS names. To overcome this limitation, a policy engine such as Calico Enterprise or Calico Cloud is necessary to implement security policies based on DNS names.

- While most engineers have a good understanding of an application’s ingress connectivity requirements, they often lack awareness of the application’s egress connectivity requirements, particularly in cloud environments. In such environments, an application may need to connect to numerous destination domain names through proxy services.
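To make the first challenge concrete, here is a sketch of what a standard Kubernetes NetworkPolicy can express for egress: destinations selected by label inside the cluster, and destinations identified by an IP block outside it. There is no field for a domain name. The namespace, the "summary" label, and the CIDR (a documentation-only address range) are assumptions made for illustration.

```yaml
# Hypothetical example: egress rules available in the Kubernetes NetworkPolicy API.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: customer-egress      # illustrative name
  namespace: demo            # illustrative namespace
spec:
  podSelector:
    matchLabels:
      app: customer
  policyTypes:
    - Egress
  egress:
    # In-cluster destination, selected by pod label.
    - to:
        - podSelector:
            matchLabels:
              app: summary   # illustrative label
      ports:
        - protocol: TCP
          port: 80
    # External destination: only an IP range can be expressed here,
    # which is hard to maintain when the service's addresses change.
    - to:
        - ipBlock:
            cidr: 203.0.113.0/24
      ports:
        - protocol: TCP
          port: 443
```

If the external service moves to a different address range, a rule like the second one silently stops matching, which is the gap that domain-based rules are meant to close.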

Calico DNS observability: DNS information gathering

Calico enhances observability within Kubernetes clusters by deploying agents on each cluster node that actively monitor DNS requests and responses, capturing and logging the data in a designated location: /var/log/calico/dnslogs on the relevant nodes. In addition to the DNS monitoring agents, Calico also deploys a log shipping agent (fluentd) on each cluster node. The fluentd agent facilitates the secure transmission of DNS logs from the nodes to a centralized Elasticsearch instance.

The captured DNS logs play a crucial role in implementing DNS policies, particularly when the policy implementer lacks comprehensive knowledge of the destination domain names that pods need to connect to. These logs provide essential information for understanding the application’s egress connectivity requirements, enabling the effective configuration and enforcement of DNS policies. The DNS logs are available through the following Calico observability features.

- Dynamic Service and Threat Graph

- DNS dashboard (via Kibana)

Calico DNS logs provide comprehensive metadata on name resolution activities within the cluster, including the source of the request, the DNS server responding to the request, name resolution query, latency information, and more. These logs serve as invaluable sources of information for observability, troubleshooting, and policy development, enabling you to gain insights into the cluster’s DNS behavior and make informed decisions. For a more in-depth understanding of Calico DNS logging and observability, refer to this informative blog post, DNS observability and troubleshooting for Kubernetes and containers with Calico.

Here is a sample Calico DNS log record for reference:

start_time: '2023-05-29T19:00:05.488280546Z'
end_time: '2023-05-29T19:05:15.940597425Z'
type: log
count: 1
client_name: '-'
client_name_aggr: compliance-benchmarker-*
client_namespace: tigera-compliance
client_ip: null
client_labels:
  app.kubernetes.io/name: compliance-benchmarker
  controller-revision-hash: 559d4c4596
  k8s-app: compliance-benchmarker
  pod-template-generation: '1'
  projectcalico.org/namespace: tigera-compliance
  projectcalico.org/orchestrator: k8s
  projectcalico.org/serviceaccount: tigera-compliance-benchmarker
servers:
  - name: coredns-787d4945fb-5w5rs
    name_aggr: coredns-787d4945fb-*
    namespace: kube-system
    ip: 100.64.0.97
qname: >-
  tigera-secure-es-gateway-http.tigera-elasticsearch.svc.tigera-compliance.svc.cluster.local
qclass: IN
qtype: A
rcode: NXDomain
rrsets:
  - name: cluster.local
    class: IN
    type: SOA
    rdata:
      - >-
        ns.dns.cluster.local hostmaster.cluster.local 1685387031 7200 1800 86400
        30
  - name: ''
    class: 1232
    type: OPT
    rdata:
      - ''
latency:
  count: 1
  mean: 196000
  max: 196000
latency_count: 1
latency_mean: 196000
latency_max: 196000

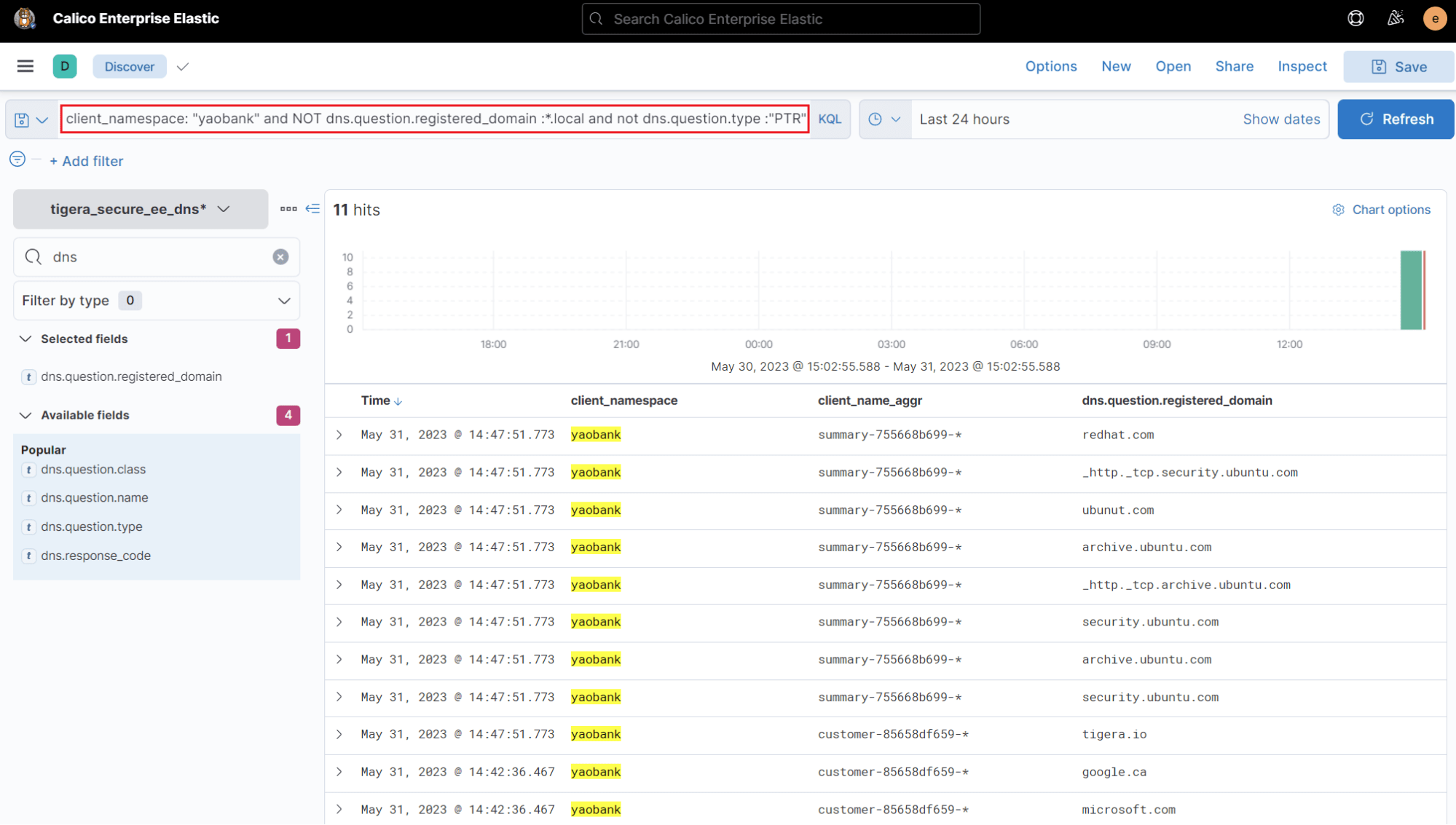

Calico DNS logs provide a convenient way to gather information on destination domains located outside the cluster. To illustrate this, the image below demonstrates how to filter the above sample DNS log using specific parameters in Calico DNS logs:

client_namespace: yaobank– filters the logs based on the source namespace.not dns.question.registered_domain : *.local– filters out any domain name that does not end with .local. This filter excludes name resolutions for resources within the cluster.not dns.question.type :"ptr"– filters out DNS PTR records.

By leveraging DNS logs and applying these filters, you can efficiently identify and analyze the pertinent DNS names, enabling you to easily reference them in your security policy.

DNS Policy Implementation

After identifying the destination domains necessary for our DNS policy, we can proceed to construct the policy accordingly. However, before diving into specific examples of Calico DNS policies, it is essential to understand the fundamental prerequisites that should be taken into account when formulating such policies. Here are some key considerations to keep in mind when deploying Calico DNS policies:

- Domain names can be used only in egress allow rules; they are not supported in deny rules.

- Calico employs trusted DNS servers to ensure improved security by preventing malicious workloads from using fake DNS servers to manipulate domain names in policy rules. By relying only on trusted DNS information, Calico safeguards against potential hijacking attempts and maintains the integrity of DNS resolution.

- For more information regarding dnsTrustedServers and its configuration, see the Calico Felix configuration resource documentation.

- The supported DNS types are: A, AAAA, and CNAME records.

- Domain names must be exact matches in Calico DNS policies unless a wildcard (*) is used. This means that a domain like “www.tigera.io” is considered distinct from “www.www.tigera.io”.

- For additional information, see the Calico documentation on domain name matching.

- Calico DNS policy enforcement can be applied to both workload endpoints (pods) and host endpoints (nodes). If you wish to implement DNS policies for nodes, you must include your nodes’ DNS server in the dnsTrustedServers list. To learn more, see the Calico documentation on host endpoint protection.

- DNS policy is supported by both Calico networkpolicy and globalnetworkpolicy. If you intend to implement DNS policy for cluster nodes, you must use globalnetworkpolicy, since cluster nodes are not namespaced resources. To learn more about Calico networkpolicy and globalnetworkpolicy, see the Calico documentation for these resources.
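As a rough sketch of the trusted DNS server requirement in the list above, the fragment below adds a node-level resolver to dnsTrustedServers in the default FelixConfiguration resource. The resolver IP address is a hypothetical placeholder, and the entry formats shown are assumptions that should be checked against the Felix configuration reference for your Calico version.

```yaml
# Sketch only: merge this field into the existing default FelixConfiguration
# rather than replacing the whole resource.
apiVersion: projectcalico.org/v3
kind: FelixConfiguration
metadata:
  name: default
spec:
  dnsTrustedServers:
    - k8s-service:kube-dns   # the cluster DNS service (assumed to be the default entry)
    - 10.0.0.10              # hypothetical DNS server used by the nodes
```

Only responses from the servers listed here are used to resolve the domain names in policy rules, so a node resolver that is missing from the list would leave host endpoint DNS rules without any addresses to match.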

Now that we understand the DNS policy requirements, let’s get hands-on and use Calico DNS policy. I am using a sample application for a banking service called ‘yaobank’ (yet another online bank), which is built using microservices. The following policy allows the “customer” deployment in the yaobank namespace to make egress connections on TCP ports 80 and 443 to the domain names www.www.tigera.io and *.microsoft.com.

Note: In order for pods to make name resolution requests, they must have egress access to the coredns pods in the cluster as implemented in the policy below. This policy rule could be implemented through a dedicated security policy for the whole cluster.

apiVersion: projectcalico.org/v3
kind: NetworkPolicy
metadata:
  name: default.customer-dns-policy
  namespace: yaobank
spec:
  tier: default
  order: 0
  selector: app == "customer"
  egress:
    # Allow DNS lookups so the pods can resolve names
    - action: Allow
      protocol: UDP
      source: {}
      destination:
        ports:
          - '53'
    # Allow HTTP/HTTPS only to the listed domain names
    - action: Allow
      protocol: TCP
      source: {}
      destination:
        ports:
          - '80'
          - '443'
        domains:
          - www.www.tigera.io
          - '*.microsoft.com'
  types:
    - Egress
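The note before the policy mentions that the DNS rule could instead live in a dedicated policy for the whole cluster. One possible shape for that is sketched below as a Calico globalnetworkpolicy. The policy name is hypothetical, and the k8s-app == "kube-dns" selector assumes the cluster DNS pods carry that label, which should be verified in your cluster.

```yaml
# Sketch only: cluster-wide egress allow for DNS lookups to the cluster DNS pods.
apiVersion: projectcalico.org/v3
kind: GlobalNetworkPolicy
metadata:
  name: default.allow-cluster-dns      # hypothetical name, prefixed with the tier name
spec:
  tier: default
  order: 0
  selector: all()                      # applies to every endpoint
  types:
    - Egress
  egress:
    - action: Allow
      protocol: UDP
      destination:
        selector: k8s-app == "kube-dns"   # assumed label on the DNS pods
        ports:
          - 53
    - action: Allow
      protocol: TCP
      destination:
        selector: k8s-app == "kube-dns"
        ports:
          - 53
```

Keep in mind that once a policy with egress rules selects an endpoint, egress that no policy allows is denied, so a cluster-wide selector like this is best rolled out together with the policies that allow each workload's remaining egress traffic.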

DNS Policy Validation

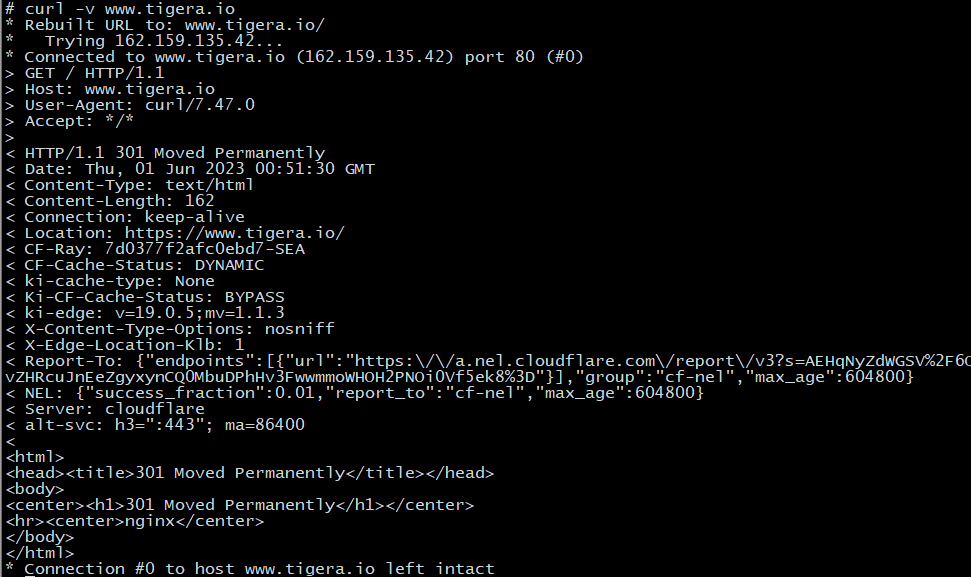

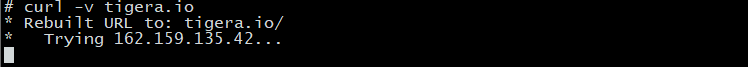

After deploying a DNS policy, we can verify pod connectivity to the specified domain names using the curl command. It is important to note that Calico DNS policies require exact matches for domain names. While we successfully connect to www.www.tigera.io when curling, our connection to www.tigera.io should fail as depicted in the following images.

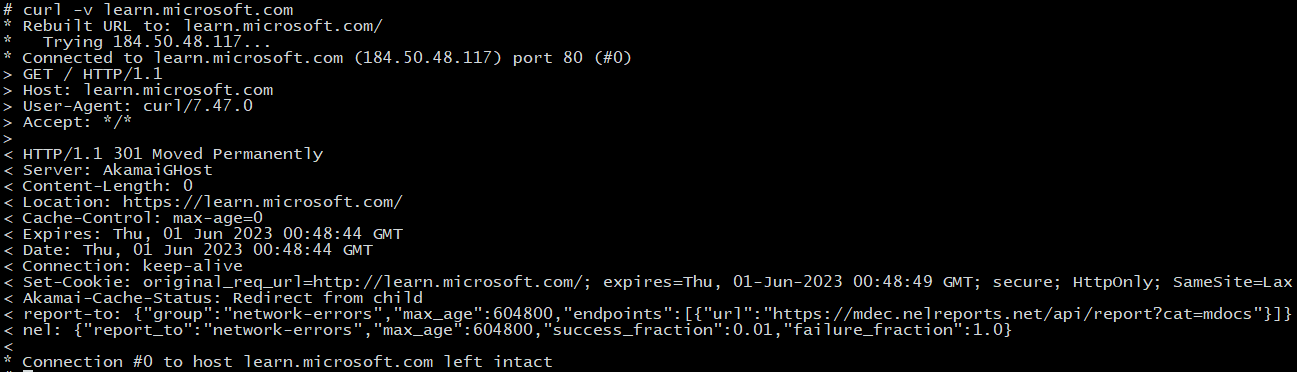

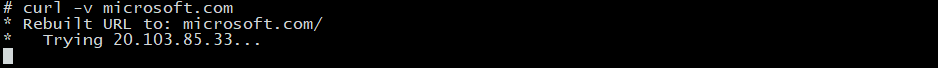

The same principle applies to connections with Microsoft domains. Connections to microsoft.com are expected to fail, while connections to any Microsoft subdomain should succeed as demonstrated below.

Troubleshooting connectivity issues with Calico observability

The distributed and dynamic nature of Kubernetes presents significant challenges when it comes to identifying the root cause of connectivity issues. However, by implementing effective observability measures with tools like Calico, we can overcome these challenges. Calico observability allows systems engineers to monitor network traffic, collect performance metrics, analyze network flows, and gain real-time visibility into the system connectivity. This enables the timely detection of anomalies, identification of bottlenecks, and tracing of connectivity issues back to their source. Let’s utilize Calico observability to pinpoint the root cause of connectivity issues in our DNS policy.

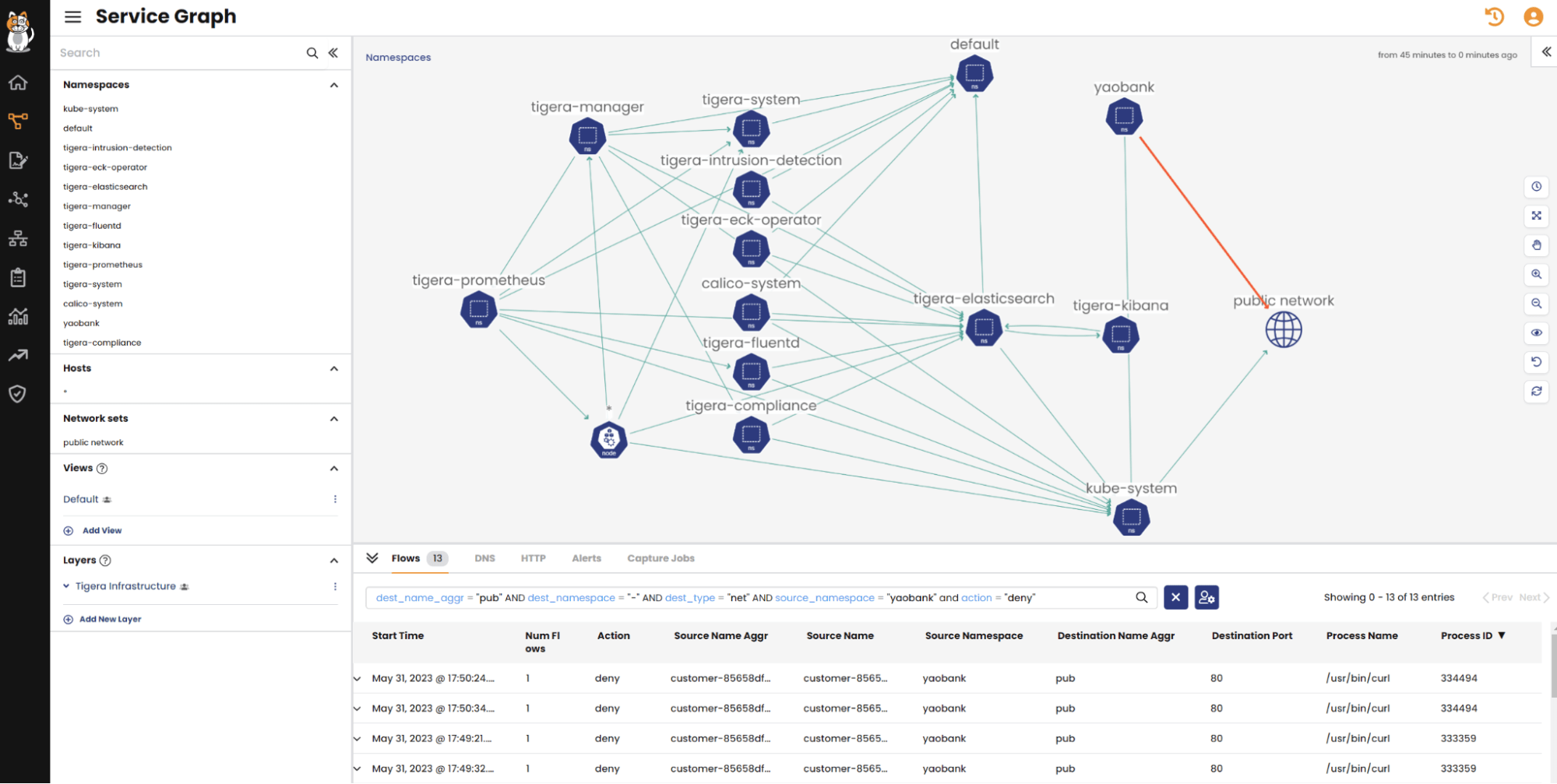

As seen in the image below, Calico’s Dynamic Service and Threat Graph provides a visual representation of the network interactions between microservices. A closer examination of the screenshot reveals disruptions in the connections (red link) between the yaobank namespace and the Internet (public network). To identify the root cause, we can analyze the relevant flow logs collected from the link connecting the yaobank namespace to the public network. Calico observability is empowered by Calico flow logs, providing a comprehensive view of connection details. In this particular case, the flow logs indicate that the connection from the “customer” deployment to the “www.tigera.io” domain on port 80 is being blocked by the security policy named “customer-dns-policy”. Additionally, flow logs offer valuable insights beyond connectivity issues, such as granular information about the process and its arguments used to establish the connection, TCP statistics, and more, as exemplified in the following flow logs.

{
  "start_time": "May 31, 2023 @ 17:44:47.000",
  "end_time": "May 31, 2023 @ 17:44:58.000",
  "source_ip": "100.64.1.103",
  "source_name": "customer-85658df659-2c7p6",
  "source_name_aggr": "customer-85658df659-*",
  "source_namespace": "yaobank",
  "nat_outgoing_ports": null,
  "source_port": null,
  "source_type": "wep",
  "source_labels": [
    "app=customer",
    "pod-template-hash=85658df659",
    "version=v1"
  ],
  "dest_ip": "162.159.135.42",
  "dest_name": "-",
  "dest_name_aggr": "pub",
  "dest_namespace": "-",
  "dest_port": 80,
  "dest_type": "net",
  "dest_labels": [],
  "dest_service_namespace": "-",
  "dest_service_name": "-",
  "dest_service_port": "-",
  "dest_service_port_num": null,
  "dest_domains": ["www.tigera.io"],
  "proto": "tcp",
  "action": "deny",
  "reporter": "src",
  "policies": ["0|default|yaobank/default.customer-dns-policy|deny|-1"],
  "bytes_in": 0,
  "bytes_out": 60,
  "num_flows": 1,
  "num_flows_started": 0,
  "num_flows_completed": 1,
  "packets_in": 0,
  "packets_out": 1,
  "http_requests_allowed_in": 0,
  "http_requests_denied_in": 0,
  "process_name": "/usr/bin/curl",
  "num_process_names": 1,
  "process_id": "327791",
  "num_process_ids": 1,
  "process_args": ["-sw %{http_code} www.tigera.io"],
  "num_process_args": 1,
  "original_source_ips": null,
  "num_original_source_ips": 0,
  "tcp_mean_send_congestion_window": 0,
  "tcp_min_send_congestion_window": 0,
  "tcp_mean_smooth_rtt": 0,
  "tcp_max_smooth_rtt": 0,
  "tcp_mean_min_rtt": 0,
  "tcp_max_min_rtt": 0,
  "tcp_mean_mss": 0,
  "tcp_min_mss": 0,
  "tcp_total_retransmissions": 0,
  "tcp_lost_packets": 0,
  "tcp_unrecovered_to": 0,
  "host": "worker2",
  "@timestamp": 1685580298000
}

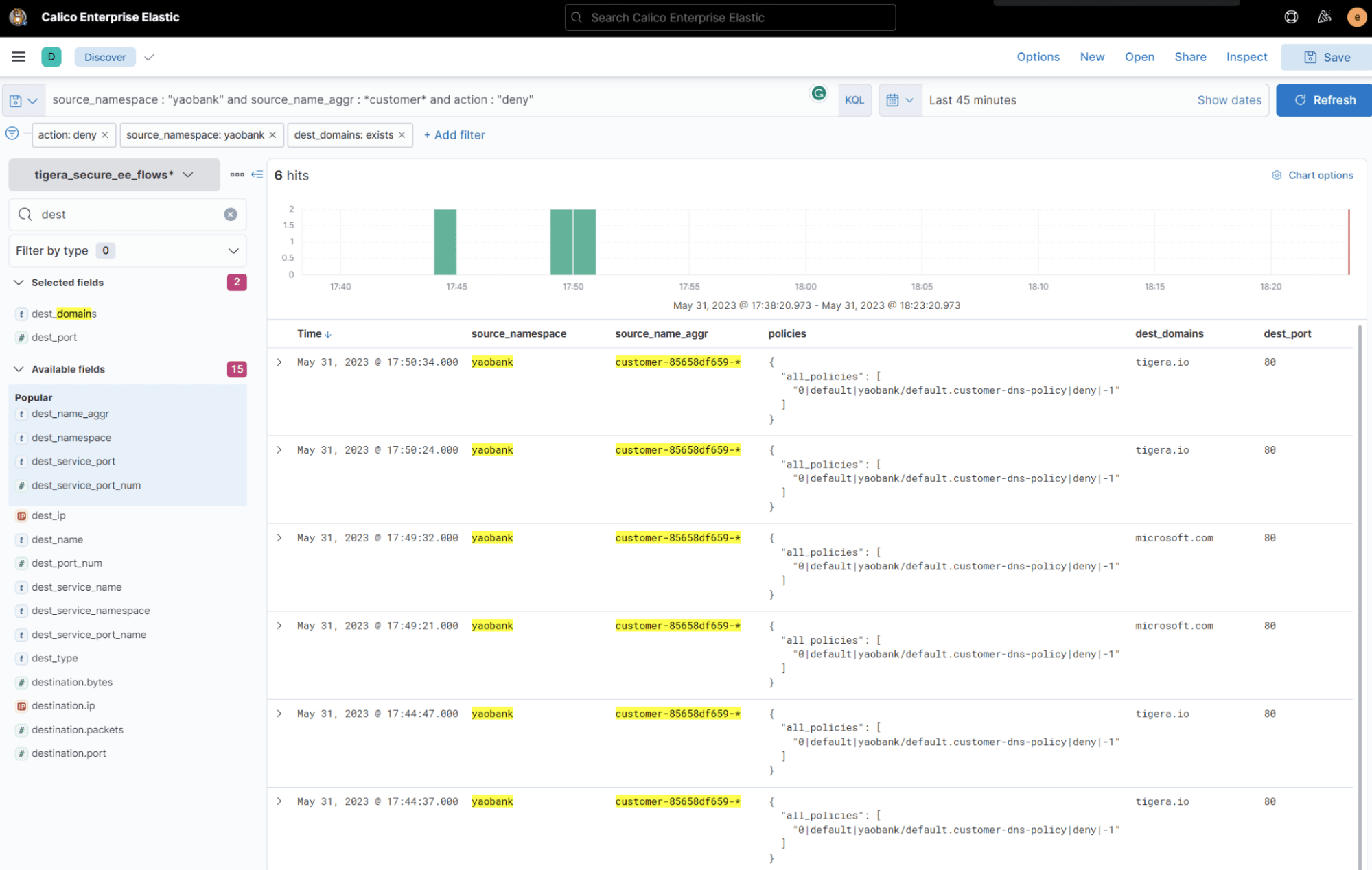

Just like the Service Graph, Calico logging dashboards provide a convenient way to filter and analyze connection information. The screenshot below demonstrates a filtered view of Calico flow logs, focusing on denied connections originating from the “customer” deployment in the yaobank namespace and directed towards the Internet. By utilizing Calico logging dashboards, it becomes effortless to quickly identify pertinent denied connections, enhancing visibility and simplifying the troubleshooting process.

Summary

DNS policy is an effective method for managing the egress access of Kubernetes workloads to external resources, including SaaS services and legacy workloads outside the cluster. By implementing DNS policy for applications, DevOps and application teams gain precise control over their access. However, it is worth noting that identifying the exact external destination domain names can often present a challenge, as operators may lack comprehensive knowledge of them; Calico DNS logs help close that gap. Calico DNS policy stands as a reliable and efficient solution for enabling egress access from pods to destinations beyond the cluster. By utilizing Calico DNS policy features, DevOps teams can establish secure and seamless communication between Kubernetes workloads and external resources.

Ready to get started? Try Calico Cloud for free.
