Hi all! I am part of the architecture team at Avito.ru, one of the world’s top classifieds sites (read more about Avito here). In this post I want to share our experience implementing Kubernetes at scale.

Kubernetes is a powerful orchestration tool that helps us manage dozens of microservices and supports robust, fast deployments. It’s really cool that we don’t have to manage resources manually, think about service discovery, and so on. However, it is not an ideal solution, and we’ve encountered a number of problems while implementing it. Here I want to share some of these issues and the workarounds we suggest. First of all, it’s important to highlight that we run a self-hosted installation of Kubernetes. We use Calico for network setup. We run multiple versions of Kubernetes: 1.5, 1.6, and 1.8.

Slow scheduling

We have several environments, each with its own Kubernetes cluster: one development cluster and two production clusters. In the development cluster, we test our software (monolithic app, microservices, crons, and so on). For now, we have roughly 10 deploys per minute and thousands of pods deployed. As the number of containers in the cluster grew, we ran into slow scheduling. Having 70 pods deployed on the same node was enough for the problem to arise: each successive deployment took minutes. Slow scheduling is not an issue in production, where we deal with high loads and high CPU utilization and therefore don’t need more than 70 pods on each node.

However, in the dev cluster we run many microservice instances, and they are not resource-intensive. We want to use server resources efficiently. Our solution was a straightforward one: we installed six KVM virtual machines on each physical server. Each virtual machine is an isolated Kubernetes node with kubelet, kube-proxy, the Docker daemon, and other software installed. We also capped the number of pods that can be scheduled on a single node at 60. As a result, we run 360 pods per physical server without any scheduling problems.
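The capacity arithmetic can be sketched in a few lines of Python. The function names are hypothetical, and using the kubelet `--max-pods` flag to enforce the cap is an assumption: the text above does not say which mechanism was used.

```python
def pods_per_server(vms_per_server: int, max_pods_per_node: int) -> int:
    """Upper bound on pods per physical server when every VM is one node."""
    return vms_per_server * max_pods_per_node


def kubelet_max_pods_flag(max_pods_per_node: int) -> str:
    """Render the kubelet flag that caps pods per node (assumed mechanism)."""
    return f"--max-pods={max_pods_per_node}"


if __name__ == "__main__":
    # Six KVM nodes per server, 60 pods per node, as described above.
    print(pods_per_server(6, 60))
    print(kubelet_max_pods_flag(60))
```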

CPU resource limits overhead

Before Kubernetes, we ran our services in LXC, and some of them were migrated to Kubernetes without any codebase changes. One interesting thing we noticed was that their performance in k8s was lower than in LXC. Even computationally intensive services performed worse at the same CPU utilization.

One problem turned out to be in the Linux kernel setup. New servers were using the default CPU frequency governor mode, powersave. Once we switched it to performance mode, performance immediately improved. Still, the majority of services kept suffering from slow responses and high latency. After some experiments, we found that the main reason was a low CPU limit. For example, for a basic Python service with a limit of 1 CPU, the 99th-percentile response time was 100 ms. After we removed the CPU limit, the response time dropped to 10 ms. The CPU utilization rate remained the same (much lower than the limit), and we had sufficient resources for scaling. In other words, the service was slowed down even though it still had a safety margin before reaching the CPU limit. Even a CPU limit set twice as high as the app’s normal load can still slow the app down. If you do set a CPU limit and care about performance, set it to at least 1.5x the app’s normal load.

Zero-downtime deploy with ingress

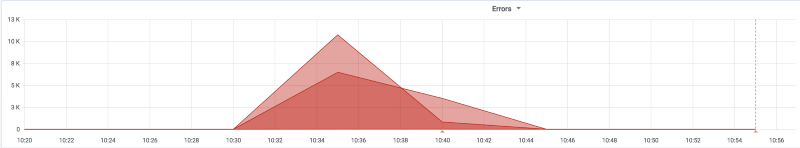

Errors during service deployment

Proper service configuration remains one of the major challenges for us. When we deployed a pre-configured service, some requests failed during an update. In the dev and production clusters, we use the native nginx-based ingress controller. The ingress mechanism is described in detail here.

In the current implementation, nginx upstreams have servers pointing to Kubernetes endpoints by default. The ingress controller “listens” to events and reloads its config with zero downtime. But let’s dig deeper into the rolling update process. Kubernetes sends a SIGTERM signal to the first process in each container of the pod and, at the same time, records the pod’s termination in the Kubernetes API. Because these steps run in parallel, a service may already have stopped while the ingress is still sending traffic to the pod through its current upstreams. Even if you shut down all existing connections gracefully, the service won’t accept new connections because it has already received SIGTERM.

Here is a workaround for this issue. You can implement dedicated logic in the service so that, on SIGTERM, it keeps serving for several seconds and only then stops accepting new connections. Alternatively, you can use a Kubernetes pod lifecycle feature, the preStop hook. The process receives SIGTERM only after the preStop hook has completed. For example, you can make the hook sleep for 30 seconds. That significantly prolongs deployment, but in most cases it is enough to achieve zero downtime. Of course, you can adjust the time for your specific case.
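As a rough illustration of the preStop approach, the Python sketch below builds the relevant part of a pod spec and prints it as JSON, which `kubectl` accepts alongside YAML. The container name, image, and the 15-second shutdown allowance are hypothetical, and the hook assumes a `sleep` binary exists in the image. Because the termination grace period also covers the time spent in the hook, the sketch raises `terminationGracePeriodSeconds` above the sleep duration.

```python
import json


def with_prestop_sleep(container: dict, seconds: int) -> dict:
    """Return a copy of a container spec with a preStop hook that sleeps."""
    patched = dict(container)
    patched["lifecycle"] = {
        "preStop": {"exec": {"command": ["sleep", str(seconds)]}}
    }
    return patched


def pod_spec(container: dict, sleep_seconds: int, shutdown_seconds: int) -> dict:
    """Build a pod spec whose grace period covers the hook plus shutdown."""
    return {
        # The grace period countdown includes the preStop hook, so it has
        # to be longer than the sleep or the container is killed early.
        "terminationGracePeriodSeconds": sleep_seconds + shutdown_seconds,
        "containers": [with_prestop_sleep(container, sleep_seconds)],
    }


if __name__ == "__main__":
    # Hypothetical container name and image, for illustration only.
    container = {"name": "my-service", "image": "example/my-service:1.0"}
    print(json.dumps(pod_spec(container, 30, 15), indent=2))
```

The resulting fragment belongs under `spec.template.spec` of a Deployment; the rest of the manifest is left out here.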

Helm updates

Helm is a package manager for Kubernetes. We use Helm for service deployment. It has many functions, such as environment management, waiting for resources during deployment, computing the deployment result, and many others. Architecturally, it consists of two binaries: the helm client and the tiller server. All release data is stored in Kubernetes configmaps.

Helm is still under active development, and new versions are released quite often. Each release adds multiple useful features. When we update the tiller, we want to do it without deployment downtime and without any side effects from the new version. So here we use a small trick that helps us deploy the new tiller without running into problems. In most cases, the release format in configmaps remains the same (or is backward compatible). So the easiest way to upgrade the tiller is to deploy it to a different Kubernetes namespace and point it at the older tiller’s configmaps. Then you can easily migrate to the new version of the tiller by setting the tiller-namespace option in the helm client. Even if something goes wrong, you can simply change the tiller-namespace in the client back, without rolling back the tiller.
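On the client side, switching between tillers comes down to one flag. The sketch below is a minimal Python wrapper around the Helm 2 client using its `--tiller-namespace` flag; the release name, chart path, and namespace names are hypothetical, and by default the function only prints the command instead of running it.

```python
import subprocess


def helm_upgrade_cmd(release: str, chart: str, tiller_namespace: str) -> list:
    """Build a Helm 2 upgrade command that targets a specific tiller."""
    return [
        "helm", "upgrade", "--install", release, chart,
        "--tiller-namespace", tiller_namespace,
    ]


def deploy(release: str, chart: str, tiller_namespace: str, dry_run: bool = True) -> list:
    """Print the command, and run it only when dry_run is False."""
    cmd = helm_upgrade_cmd(release, chart, tiller_namespace)
    print(" ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Hypothetical namespaces: the new tiller lives in "tiller-new",
    # the old one in "kube-system". Falling back is a one-argument change.
    deploy("my-release", "./chart", "tiller-new")
    deploy("my-release", "./chart", "kube-system")
```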

Network issues

We’ve experienced some network issues, such as high latency and packet drops. Ultimately, the root of the problem was the Linux kernel setup rather than Kubernetes as such. Kubernetes performance strongly depends on Linux kernel performance. You definitely need to check the CPU frequency governor mode.
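One way to check the governor on a node is to read the standard cpufreq files in sysfs. The Python sketch below is a minimal example with hypothetical function names; it assumes the node exposes `scaling_governor` files, which may not be the case inside some virtual machines.

```python
from pathlib import Path


def read_governors(cpu_root: str = "/sys/devices/system/cpu") -> dict:
    """Map each CPU name (cpu0, cpu1, ...) to its current governor."""
    governors = {}
    pattern = "cpu[0-9]*/cpufreq/scaling_governor"
    for path in sorted(Path(cpu_root).glob(pattern)):
        # path is <root>/cpuN/cpufreq/scaling_governor
        governors[path.parent.parent.name] = path.read_text().strip()
    return governors


def cpus_not_in(governors: dict, wanted: str = "performance") -> list:
    """List the CPUs whose governor differs from the wanted one."""
    return [cpu for cpu, governor in governors.items() if governor != wanted]


if __name__ == "__main__":
    current = read_governors()
    print(current)
    print("not in performance mode:", cpus_not_in(current))
```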

The following tweaks are optional, but we recommend trying them out if you are experiencing network problems.

- Pin network interrupts to dedicated CPU cores. This lets you allocate several physical cores to network processing so that it does not compete with other processes.

- Increase RX ring buffer.

- If you use KVM virtualization for Kubernetes nodes, check your network adapter. We use virtio, and it performs well.

- Increase the size of network buffers in the Linux kernel and the number of queues in the NIC, and configure interrupt coalescing.
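Several of the tweaks above map onto standard `ethtool` and `sysctl` invocations. The Python sketch below only builds the command lines. The interface name and every numeric value are placeholders, not recommendations, and the supported ranges depend on the NIC and driver.

```python
def tuning_commands(iface: str, rx_ring: int, queues: int,
                    rx_usecs: int, buf_bytes: int) -> list:
    """Build (but do not run) commands for the NIC and kernel tweaks."""
    return [
        # Increase the RX ring buffer.
        ["ethtool", "-G", iface, "rx", str(rx_ring)],
        # Set the number of combined RX/TX queues.
        ["ethtool", "-L", iface, "combined", str(queues)],
        # Configure interrupt coalescing for received packets.
        ["ethtool", "-C", iface, "rx-usecs", str(rx_usecs)],
        # Raise the maximum socket buffer sizes in the kernel.
        ["sysctl", "-w", f"net.core.rmem_max={buf_bytes}"],
        ["sysctl", "-w", f"net.core.wmem_max={buf_bytes}"],
    ]


if __name__ == "__main__":
    # Placeholder values; check `ethtool -g`, `ethtool -l`, and
    # `ethtool -c` on your hardware before applying anything.
    for cmd in tuning_commands("eth0", 4096, 8, 50, 16777216):
        print(" ".join(cmd))
```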

Local development performance

During the past year, we have been running the default installation of minikube with VirtualBox and vboxsf for sharing folders with code. We have several hundred developers on our team; the majority of them run macOS, and some work on Linux (Arch, Ubuntu, etc.). We still have a large monolithic app interacting with microservices. For local development, we use the same tools as in production: Kubernetes for container orchestration and Helm for environment management and deployment. That’s why we chose minikube, an easy one-click installation tool for local single-node Kubernetes clusters with cross-platform support.

During the implementation of minikube for local development, we had to run 16 pods and approximately 30 containers. The monolithic app and related services were packaged as a Helm chart. There was only one problem: after deploying the system locally, the laptop froze. With the CPU running at 300% and 4 GB of RAM used, it was not a workable solution. The first idea was to move all big pods, such as the Postgres databases and the search system, to a remote cluster. After that, we could run the system on 8-core workstations with 16 GB of RAM. In other words, the system could still only run on a high-performance workstation. Even when the deployment went well, the website’s response time was several seconds, which was not what we wanted.

We tried a different hypervisor. We chose xhyve, which is based on the native hypervisor API, for Mac and KVM for Linux. It reduced CPU usage to 20–50% and RAM usage to less than 1 GB, which was acceptable. But the website was still taking about 5 seconds to load. We found that the root cause was the very poor performance of stat syscalls on the shared folder. This time NFS came to our rescue. We mounted the host directory into the VM over NFS when starting minikube and got really good filesystem call performance.
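To compare mount types on your own machine, a crude micro-benchmark is usually enough. The Python sketch below is a hypothetical helper, not the tool used for the measurements above: it walks a directory tree, calls `os.stat` on each file, and reports the elapsed time. Run it inside the VM against the shared code folder, once per mount type.

```python
import os
import sys
import time


def time_stat_calls(root: str, max_files: int = 10000) -> tuple:
    """Stat up to max_files files under root; return (count, seconds).

    The elapsed time also includes the directory traversal itself.
    """
    count = 0
    start = time.perf_counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                os.stat(os.path.join(dirpath, name))
            except OSError:
                # Skip broken symlinks and files removed during the walk.
                continue
            count += 1
            if count >= max_files:
                return count, time.perf_counter() - start
    return count, time.perf_counter() - start


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    files, seconds = time_stat_calls(target)
    print(f"{files} stat calls in {seconds:.3f} s")
```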

Since then, new minikube drivers have been released for hyperkit and KVM2. We will try them out soon. We do recommend switching from VirtualBox with vboxsf to a different hypervisor and mount system. It will free up your resources and speed up the local environment.

Despite all its issues, Kubernetes provides fast and reliable deployment in any environment and is highly flexible for building a microservices architecture.

This article originated from http://medium.com/avitotech/kubernetes-issues-and-solutions-2baffe25f40b
